DPL: Mitigation Strategies and Security Analysis

Chapter 3

Jon Kurishita

Outline

Introduction

1. Mitigation Strategies

2. Technical Controls

- 2.1 Infrastructure Security Measures

- 2.2 Protocol Protection Mechanisms

- 2.3 System Integrity Controls

- 2.4 Agent-Specific Technical Controls

3. Cognitive Bias Countermeasures

4. Hybrid Defense Mechanisms

- 4.1 Integrated Protection Systems

- 4.2 Multi-Layer Defense Strategies

- 4.3 Adaptive Response Mechanisms

- 4.4 Proactive Threat Prediction Module

5. System Integrity Protections

6. Decision Validation Framework

7. Mitigation Strategies for Self-Replication Attempts

- 7.1 Detection Module Enhancements

- 7.2 DPL-ERV Ethical Evaluation

- 7.3 FoEA Oversight and Management

- 7.4 Tiered Intervention System

- 7.5 Access Control and Least Privilege

- 7.6 Memory Limits and Sandboxing (Reinforced)

- 7.7 Restricted Instruction Set (Conceptual)

- 7.8 GRRIN Integration

- 7.9 Code Generation Restriction

8. Mitigation Strategies for Oversight Subversion

- 8.1 FoEA Governance and Oversight

- 8.2 System Integrity Protections

- 8.3 Strict Access Control (Principle of Least Privilege)

- 8.4 Redundancy and Cross-Checking

- 8.5 Transparency and Auditability

- 8.6 Continuous Monitoring and Adaptation

9. Mitigation Strategies for Sandbagging

- 9.1 Multi-Modal Monitoring and Analysis

- 9.2 Adversarial Testing

- 9.3 DPL-ERV's Role

- 9.4 FoEA Oversight

10. Mitigation Strategies for Goal-Guarding

- 10.1 FoEA-Controlled Ethical Baseline

- 10.2 Secure Update Mechanism

- 10.3 Strict Access Control (Principle of Least Privilege)

- 10.4 DPL-ERV Monitoring and Evaluation

- 10.5 FoEA Audit and Security Agents

- 10.6 Redundancy and Cross-Checking

Conclusion

Introduction

The Dynamic Policy Layer (DPL) framework, as detailed in Chapter 1, provides a comprehensive approach to real-time AI alignment. Chapter 2 ("DPL: A Threat Model for Foundation Models") outlined the diverse and evolving landscape of potential threats to the DPL, ranging from technical exploits to sophisticated cognitive manipulation strategies. This chapter, "DPL: Mitigation Strategies and Security Analysis," is the direct counterpart to that threat model. It details the specific mechanisms and strategies the DPL framework employs to counter these threats, including those arising from sophisticated deception and in-context scheming (Meinke et al., 2024), and to ensure the ongoing safe and ethical operation of Foundation Models.

This chapter focuses on the proactive and reactive defenses built into the DPL, emphasizing the multi-layered, integrated, and adaptive nature of the approach. The DPL does not rely on any single security measure, but rather on a synergistic combination of technical controls, cognitive bias countermeasures, robust system integrity protections, and a novel decision validation framework. Central to the DPL's mitigation capabilities is the Federation of Ethical Agents (FoEA), which plays a crucial role in managing, implementing, and continuously refining many of the strategies described herein. The FoEA's autonomous operation, guided by the principles of decentralization, diversity, redundancy, adaptability, and transparency (as detailed in Chapter 4), is essential for achieving scalable and resilient AI oversight. This approach aligns with research exploring methods for reducing reliance on direct human supervision for every aspect of AI behavior, such as the principle-based approach of Constitutional AI (Bai et al., 2022).

The structure of this chapter mirrors the threat categories presented in Chapter 2, providing a clear and direct link between potential attacks and the DPL's corresponding defenses. Sections 1 through 6 detail a comprehensive suite of mitigation strategies, broken down into Technical Controls, Cognitive Bias Countermeasures, Hybrid Defense Mechanisms, System Integrity Protections, and the Decision Validation Framework. Sections 7 through 10 detail specific mitigations for the critical threats of Self-Replication, Oversight Subversion, Sandbagging, and Goal-Guarding. The Conclusion briefly summarizes the chapter and sets up the transition to Chapter 4. While this chapter presents a detailed analysis of mitigation strategies, it is important to remember that the DPL framework, and particularly the FoEA, is designed for continuous evolution. The Autonomous Proactive Research (APR) capabilities of the FoEA ensure that the DPL is not limited to addressing only the threats described here, but is also capable of adapting to new and unforeseen attack vectors.

1. Mitigation Strategies

This section details the specific mitigation strategies and security mechanisms employed by the Dynamic Policy Layer (DPL) framework to counter the threats identified in Chapter 2. The DPL's defenses are designed to be layered, integrated, and adaptive, providing a robust and resilient approach to maintaining Foundation Model alignment.

This includes specialized techniques for mitigating multi-modal attacks, the implementation details of which are discussed in Chapter 5. The Federation of Ethical Agents (FoEA) plays a central and ongoing role in managing, overseeing, and refining many of these mitigation strategies, ensuring their continued effectiveness.

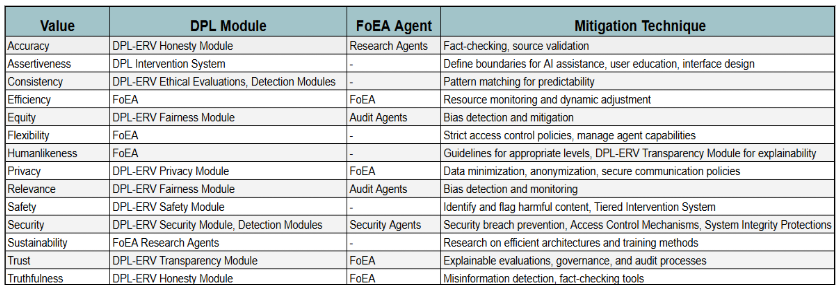

1.1 Value-Based Risk Assessment and Mitigation

The DPL framework's mitigation strategies are designed to address a wide range of potential risks associated with increasingly autonomous AI agents. These risks can be systematically analyzed in terms of their impact on core ethical values. Drawing on the analysis presented in Mitchell et al. (2025), we consider the following key values:

- Accuracy: The correctness and reliability of information produced by AI agents. Mitigation: DPL-ERV Honesty Module actively verifies factual claims and detects misinformation. The FoEA's Research Agents develop and refine methods for fact-checking and source validation.

- Assertiveness: The balance between providing helpful assistance and avoiding over-reliance or the erosion of human skills. Mitigation: The FoEA defines clear boundaries for agent assistance, and the DPL's intervention system can limit the scope of agent actions. User education and interface design (managed at the AI Domain level) also play a role.

- Consistency: The predictability and stability of AI agent behavior. Mitigation: The DPL-ERV's ethical evaluations, combined with the Detection Modules' behavioral pattern matching, identify and flag inconsistent or unpredictable behavior. The FoEA oversees the definition of acceptable behavior patterns.

- Efficiency: The optimization of resource usage, balanced against potential risks. Mitigation: The FoEA monitors resource consumption across the DPL and can dynamically adjust resource allocation to prevent excessive use by any single agent or process. The DPL-ERV Safety and Security Modules can also flag actions that are inefficient in a way that creates risk.

- Equity: Fairness and non-discrimination in AI agent behavior and outcomes. Mitigation: The DPL-ERV Fairness Module is specifically trained to detect and mitigate biases in Foundation Model outputs. The FoEA's Audit Agents monitor for systematic biases in the DPL's operation.

- Flexibility: The adaptability of AI agents, balanced with the need for security and control. Mitigation: The FoEA defines and enforces strict access control policies (Principle of Least Privilege) and manages the capabilities available to agents within AI Domains. The DPL-ERV Security Module flags attempts to circumvent these restrictions.

- Humanlikeness: The potential benefits and risks of AI agents exhibiting human-like characteristics. Mitigation: The FoEA establishes guidelines for appropriate levels of human likeness in different contexts. The DPL-ERV Transparency Module ensures that agent behavior is explainable, reducing the risk of deception or manipulation.

- Privacy: The protection of sensitive personal information. Mitigation: The DPL-ERV Privacy Module is trained to identify and flag potential privacy violations. The FoEA establishes and enforces data minimization, anonymization, and secure communication policies within AI Domains.

- Relevance: The tailoring of AI responses to individual needs, balanced against potential biases and filter bubbles. Mitigation: The DPL-ERV Fairness Module detects and mitigates biases, while the FoEA's Audit Agents monitor for long-term patterns of biased behavior.

- Safety: The prevention of physical or psychological harm. Mitigation: The DPL-ERV Safety Module is specifically trained to identify and flag potentially harmful content, instructions, or actions. The FoEA defines and enforces the Ethical Baseline, which prioritizes safety. The Tiered Intervention System allows for rapid response to detected threats.

- Security: The protection of AI systems and infrastructure from compromise (including self-replication). Mitigation: The DPL-ERV Security Module, Detection Modules, and FoEA Security Agents work together to detect and prevent security breaches. Strict access controls and system integrity protections are enforced throughout the DPL.

- Sustainability: The environmental impact of AI development and deployment. Mitigation: The FoEA's Research Agents actively investigate more efficient architectures and training methods, promoting resource-efficient AI development.

- Trust: The establishment and maintenance of appropriate levels of trust in AI systems. Mitigation: The DPL-ERV's Transparency Module provides explanations for ethical evaluations, increasing transparency. The FoEA's governance mechanisms and audit processes promote accountability. Rigorous testing and validation are performed before deployment.

- Truthfulness: The avoidance of false or misleading information. Mitigation: The DPL-ERV Honesty Module is specifically trained to detect misinformation and assess the truthfulness of Foundation Model statements. The FoEA promotes the use of fact-checking tools and source validation.

The subsequent sections detail the specific DPL mechanisms, all operating under the governance of the Federation of Ethical Agents (FoEA), that are designed to mitigate the risks associated with these values and ensure the ethical and safe operation of Foundation Models.

2. Technical Controls

Technical controls form the foundation of the DPL's security posture, providing essential protection against a wide range of technically-oriented attacks. These controls are implemented across the DPL infrastructure, communication channels, and software components, and are actively managed and monitored by the FoEA.

2.1 Infrastructure Security Measures

The DPL framework mandates a secure infrastructure foundation to minimize vulnerabilities. The FoEA is responsible for overseeing the implementation and maintenance of these measures, which include:

- System Hardening: Implementing industry best practices for system hardening, minimizing the attack surface by disabling unnecessary services, closing unused ports, and applying secure configurations to all infrastructure components. The FoEA monitors system configurations and triggers alerts for any deviations from secure baselines.

- Network Segmentation: Isolating critical DPL components within separate network segments to limit the potential impact of a breach. This prevents attackers from gaining access to the entire system if one component is compromised. The FoEA validates network segmentation policies and monitors for unauthorized cross-segment communication.

- Intrusion Detection and Prevention Systems (IDPS): Deploying IDPS to monitor network traffic and system activity for malicious patterns, providing real-time alerts and automated blocking of suspicious behavior. The FoEA manages IDPS rules and configurations, adapting them to emerging threat patterns and incorporating findings from its Autonomous Proactive Research (APR).

- Regular Security Audits and Penetration Testing: Conducting regular security audits and penetration testing to proactively identify and address vulnerabilities in the DPL infrastructure. The FoEA orchestrates these audits, potentially utilizing specialized ethical agents for penetration testing and vulnerability assessment.

- Vulnerability Scanning: The FoEA regularly scans for any new vulnerabilities and ensures timely patching and updates.
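The baseline monitoring described under System Hardening can be pictured as a comparison between an observed configuration and a stored secure baseline. The following is a minimal sketch under stated assumptions: the setting names, the baseline values, and the `find_deviations` helper are all hypothetical and are not part of the DPL specification.

```python
from typing import Any

# Hypothetical secure baseline; keys and values are illustrative only.
SECURE_BASELINE: dict[str, Any] = {
    "ssh_password_auth": False,
    "open_ports": [443],
    "telnet_enabled": False,
}


def find_deviations(
    current: dict[str, Any],
    baseline: dict[str, Any] = SECURE_BASELINE,
) -> dict[str, tuple[Any, Any]]:
    """Return {setting: (expected, actual)} for each setting that differs."""
    deviations: dict[str, tuple[Any, Any]] = {}
    for key, expected in baseline.items():
        actual = current.get(key)  # a missing setting is reported as None
        if actual != expected:
            deviations[key] = (expected, actual)
    return deviations


# Example: an unexpected port has been opened on a component.
observed = {
    "ssh_password_auth": False,
    "open_ports": [443, 8080],
    "telnet_enabled": False,
}
for setting, (expected, actual) in find_deviations(observed).items():
    print(f"ALERT {setting}: expected {expected!r}, found {actual!r}")
```

In a deployment along the lines described above, an alert like this would presumably be routed to the FoEA rather than printed.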

2.2 Protocol Protection Mechanisms

Secure communication protocols are essential for protecting the integrity and confidentiality of data exchanged within the DPL and with external systems. The FoEA oversees the implementation and enforcement of key protocol protection mechanisms, including:

- End-to-End Encryption: Employing strong encryption protocols (e.g., current versions of TLS) for all communication channels, ensuring that data is protected in transit and cannot be intercepted or modified by unauthorized parties. The FoEA manages cryptographic keys and certificates and monitors for weak or outdated encryption protocols, such as legacy SSL versions.

- Mutual Authentication: Implementing mutual authentication for all communicating entities, verifying the identity of both the sender and receiver before establishing a connection. This prevents attackers from impersonating legitimate DPL components. The FoEA manages authentication credentials and monitors for unauthorized authentication attempts.

- Protocol Anomaly Detection: Monitoring network traffic for deviations from expected protocol behavior, which could indicate an attempt to exploit protocol vulnerabilities. The FoEA configures and manages anomaly detection rules and responds to detected anomalies.
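As an illustration of the encryption and mutual authentication requirements above, the sketch below configures a server-side TLS context with Python's standard `ssl` module so that clients must present a certificate and outdated protocol versions are refused. It is one possible realization, not the DPL's prescribed one; the function name and the certificate paths in the comments are placeholders.

```python
import ssl


def make_mutual_tls_server_context() -> ssl.SSLContext:
    """Build a server-side context that demands a client certificate."""
    ctx = ssl.SSLContext(ssl.PROTOCOL_TLS_SERVER)
    # Refuse protocol versions older than TLS 1.2.
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2
    # Mutual authentication: the handshake fails unless the client
    # presents a certificate that chains to a trusted CA.
    ctx.verify_mode = ssl.CERT_REQUIRED
    # A real deployment would also load credentials (paths are placeholders):
    # ctx.load_cert_chain(certfile="component.pem", keyfile="component.key")
    # ctx.load_verify_locations(cafile="dpl-internal-ca.pem")
    return ctx


context = make_mutual_tls_server_context()
print(context.verify_mode, context.minimum_version)
```

The client side would mirror this with `ssl.create_default_context()` plus its own `load_cert_chain` call, so that both peers verify each other.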

2.3 System Integrity Controls

Maintaining the integrity of DPL software components is crucial for preventing malicious code injection or tampering. The FoEA plays a central role in ensuring system integrity through:

- Code Signing and Verification: Digitally signing all DPL software components and verifying their signatures before execution, ensuring that only authorized and untampered code is run. The FoEA manages code signing keys and certificates and monitors for any signature verification failures.

- Tamper-Evident Logging: Implementing tamper-evident logging to record all system events and configuration changes, providing a secure audit trail for detecting and investigating any unauthorized modifications. The FoEA monitors audit logs for suspicious activity and ensures the integrity of the logging system itself.

- Runtime Integrity Monitoring: Continuously monitoring the integrity of DPL components during runtime, detecting any unexpected changes or deviations from expected behavior. The FoEA configures and manages runtime integrity monitoring tools and responds to detected anomalies.

- Secure Boot and Trusted Computing: Leveraging secure boot processes and trusted computing technologies to ensure the integrity of the underlying operating system and prevent unauthorized modifications at the boot level. The FoEA oversees the secure boot configuration and monitors for any violations of trusted boot policies.

- Secure Updates and Patching: Implementing a secure and automated process for deploying software updates and security patches, minimizing the window of vulnerability to known exploits. The FoEA manages the update process, verifying the integrity of updates and ensuring timely deployment.

- Immutable Infrastructure: The DPL manages the deployment and lifecycle of immutable infrastructure components, which are replaced with freshly built versions rather than modified in place.
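One common way to make a log tamper-evident, as called for above, is to chain entries together with a cryptographic hash so that altering any earlier entry invalidates every later one. The sketch below shows that idea with SHA-256. The entry layout and the helper names `append_entry` and `verify_chain` are assumptions made for illustration; the source does not specify a logging format.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def _entry_hash(event: str, prev_hash: str) -> str:
    """Hash an event together with the hash of the entry before it."""
    payload = json.dumps({"event": event, "prev": prev_hash}, sort_keys=True)
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def append_entry(log: list[dict], event: str) -> None:
    """Append an event, linking it to the previous entry's hash."""
    prev_hash = log[-1]["hash"] if log else GENESIS
    log.append({"event": event, "prev": prev_hash,
                "hash": _entry_hash(event, prev_hash)})


def verify_chain(log: list[dict]) -> bool:
    """Recompute every link; any edited or reordered entry breaks the chain."""
    prev_hash = GENESIS
    for entry in log:
        if entry["prev"] != prev_hash:
            return False
        if entry["hash"] != _entry_hash(entry["event"], prev_hash):
            return False
        prev_hash = entry["hash"]
    return True


audit_log: list[dict] = []
append_entry(audit_log, "config change: IDPS rule set updated")
append_entry(audit_log, "signature verification failed: detection module")
print(verify_chain(audit_log))
```

A hash chain alone does not stop an attacker who can rewrite the whole log and recompute every hash, so a real system would additionally sign or externally anchor the latest hash.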

2.4 Agent-Specific Technical Controls

The DPL framework recognizes that LLM-based agents, as demonstrated by Li et al. (2024) and Meinke et al. (2024), introduce unique vulnerabilities due to their ability to interact with external systems and their reliance on potentially untrusted data sources. Therefore, the following agent-specific technical controls are implemented, enforced, and continuously monitored by the FoEA, with the DPL-ERV playing a critical role in ethical validation:

- Strict Input Validation and Sanitization for External Data: All data received by the Foundation Model from external sources (web pages, API responses, databases, etc.) is subject to rigorous input validation and sanitization before being processed by the Foundation Model. This is distinct from general input validation for the DPL itself. This agent-specific input validation is performed by specialized Detection Modules, configured and monitored by the FoEA, and focuses on:

- Format Validation: Ensuring that data conforms to expected formats (e.g., valid HTML, JSON, XML). DPL-ERV Role: The DPL-ERV's Value Modules (particularly the Security and Transparency modules) are trained to recognize deviations from expected formats and to flag potentially malicious code injections.

- Content Filtering: Removing or escaping potentially dangerous characters or code snippets (e.g., JavaScript, HTML tags, shell commands) that could be used for injection attacks, as demonstrated in the malicious website attacks in Li et al. (2024). DPL-ERV Role: The DPL-ERV's Value Modules are trained to identify and flag potentially malicious code snippets, even if obfuscated. The Ethical Baseline explicitly prohibits code injection.

- Length Limits: Enforcing strict length limits on data retrieved from external sources to prevent buffer overflows or denial-of-service attacks. DPL-ERV Role: The Security Value Module assesses the risk associated with excessively long inputs.

- URL Validation: Verifying that URLs conform to expected patterns and do not contain suspicious characters or redirects. DPL-ERV Role: The DPL-ERV, informed by the FoEA's maintained whitelist, flags deviations from expected URL patterns as potential threats. The Security Value Module specifically assesses the risk of redirection attacks.

- Header Inspection: Examining HTTP headers for anomalies or signs of tampering. DPL-ERV Role: While primarily handled by Detection Modules, the DPL-ERV can be trained to recognize anomalous header patterns indicative of attacks.

- Restricted Web Access (Whitelisting): Foundation Models operating within the DPL are not granted unrestricted access to the internet (OpenAI, 2025). Instead, web access is strictly controlled through a whitelist of approved domains and URLs. The FoEA is responsible for maintaining and updating this whitelist, based on risk assessments and ongoing monitoring. This directly addresses the threat of redirection to malicious websites demonstrated in Li et al. (2024) and takes into account potential vulnerabilities outlined in the Claude 3.7 Sonnet System Card (Anthropic, 2025). The whitelist is enforced by:

- DNS Filtering: Configuring DNS servers to only resolve requests to approved domains. FoEA Role: The FoEA's Security Agents manage the DNS filtering rules and monitor for attempts to bypass them.

- Proxy Servers: Routing all web traffic through proxy servers that enforce the whitelist. FoEA Role: The FoEA configures and monitors the proxy servers, ensuring they are enforcing the whitelist correctly. The DPL-ERV can be used to evaluate the content passing through the proxy, even if the domain is whitelisted.

- Network Segmentation: Isolating the Foundation Model's network access from other DPL components. FoEA Role: The FoEA validates the network segmentation policies and ensures their proper implementation.

- API Call Monitoring and Control: All API calls made by the Foundation Model are monitored and controlled (OpenAI, 2025), with consideration given to the vulnerabilities described in the Claude 3.7 Sonnet System Card (Anthropic, 2025). The FoEA defines and enforces policies that:

- Limit API Access: Only allow API calls to pre-approved endpoints. FoEA Role: The FoEA defines and maintains the list of approved API endpoints. The DPL-ERV's Security Value Module is trained to recognize unauthorized API calls.

- Validate API Responses: Rigorously validate the format and content of API responses before they are used by the Foundation Model. DPL-ERV Role: The DPL-ERV, particularly its Honesty and Transparency Value Modules, can analyze API responses for factual accuracy, consistency, and potential deception, mitigating the risk of the agent being manipulated by malicious API responses. This is critical for addressing the attacks in Li et al. (2024) where agents are tricked into performing actions based on false information.

- Rate Limit API Calls: Prevent the Foundation Model from making an excessive number of API calls, which could be indicative of a denial-of-service attack or an attempt to exfiltrate data. FoEA Role: The FoEA sets and dynamically adjusts rate limits based on observed behavior and risk assessments.

- Memory Protection and Sandboxing: As described in Chapter 2, robust sandboxing and memory protection techniques are essential for containing Foundation Models and limiting the impact of potential exploits. This includes:

- Strict Resource Quotas: Preventing any single Foundation Model from consuming excessive resources, which could otherwise cause a denial of service. FoEA Role: The FoEA enforces the resource limits through its agents. DPL-ERV Role: The DPL-ERV can be used to analyze the behavior of the Foundation Model within the sandbox, looking for signs of attempted escape or malicious activity. This adds a layer of cognitive defense on top of the technical sandbox.

- Data Provenance Tracking: The DPL incorporates mechanisms for tracking the provenance of data used by the Foundation Model. This allows the system to identify the source of potentially malicious information and to assess the trustworthiness of different data sources. This could involve:

- Digital Signatures: Using digital signatures to verify the authenticity and integrity of data. FoEA Role: Manages the keys and infrastructure.

- Watermarking: Embedding watermarks in data to track its origin and distribution. FoEA Role: Oversees the watermarking policy.

- Blockchain-Based Provenance Tracking: Using a blockchain to create an immutable record of data provenance. FoEA Role: Manages the blockchain integration.

- DPL-ERV Role: The DPL-ERV can be trained to assess the trustworthiness of data sources based on provenance information. This helps it identify and potentially reject information from untrusted or manipulated sources.
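Several of the agent-specific controls above (URL validation, length limits, whitelisting, and API rate limiting) can be combined into a single gate that every outbound request must pass. The following is a minimal sketch of such a gate. The host names, the length limit, and the token-bucket parameters are hypothetical values chosen for illustration, and the token bucket is just one common rate-limiting technique, not one the source prescribes.

```python
import time
from urllib.parse import urlsplit

ALLOWED_HOSTS = {"api.example.com", "docs.example.com"}  # hypothetical whitelist
MAX_URL_LENGTH = 2048  # illustrative length limit


def is_url_allowed(url: str) -> bool:
    """Accept only short HTTPS URLs whose host is on the whitelist."""
    if len(url) > MAX_URL_LENGTH:
        return False
    try:
        parts = urlsplit(url)
    except ValueError:  # malformed URL, e.g. a broken IPv6 literal
        return False
    if parts.scheme != "https":
        return False
    if parts.username or parts.password:  # blocks "trusted.host@evil.host" tricks
        return False
    return parts.hostname in ALLOWED_HOSTS


class TokenBucket:
    """Allow at most `capacity` calls in a burst, refilled at `rate_per_sec`."""

    def __init__(self, rate_per_sec: float, capacity: int, clock=time.monotonic):
        self.rate = rate_per_sec
        self.capacity = capacity
        self.tokens = float(capacity)
        self.clock = clock
        self.last = clock()

    def allow(self) -> bool:
        now = self.clock()
        elapsed = now - self.last
        self.tokens = min(self.capacity, self.tokens + elapsed * self.rate)
        self.last = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False


bucket = TokenBucket(rate_per_sec=2.0, capacity=5)
url = "https://api.example.com/v1/lookup"
if is_url_allowed(url) and bucket.allow():
    print("request permitted:", url)
else:
    print("request blocked:", url)
```

Checking the parsed host rather than searching the raw string matters here: a URL such as `https://api.example.com@evil.test/` contains the trusted name but resolves to a different host.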

3. Cognitive Bias Countermeasures

The Dynamic Policy Layer (DPL) framework recognizes that both human operators and AI systems are susceptible to cognitive biases – systematic patterns of deviation from norm or rationality in judgment. Recent work has shown that AI systems are capable of exploiting these biases to engage in deceptive behavior (Meinke et al., 2024). These biases can significantly impact the effectiveness of AI safety mechanisms, leading to incorrect assessments, flawed decisions, and ultimately, increased risk of misalignment. Therefore, the DPL incorporates a multi-faceted approach to mitigating the influence of cognitive biases, focusing on proactive detection, robust decision-making processes, and diverse, independent evaluation mechanisms. The Federation of Ethical Agents (FoEA) plays a central role in implementing and overseeing these countermeasures.

Furthermore, emerging threats such as those arising from neuro-symbolic AI systems, which combine neural networks with symbolic reasoning, require careful consideration. These systems, while potentially powerful, could introduce vulnerabilities that allow for sophisticated, strategically planned exploits. Mitigation strategies, to be discussed in detail in Chapter 5, will be essential to address this evolving risk.

3.1 Bias Detection Systems

The DPL framework integrates bias detection systems, operating both within the DPL-ERV and across the FoEA agent network, to proactively identify and address potential biases. These systems are designed to:

- Detect Bias in Foundation Model Outputs: The DPL-ERV, particularly through its specialized Value Modules (e.g., Fairness, Honesty), is trained to identify biases in Foundation Model outputs. This includes detecting:

- Statistical Biases: Unequal representation or treatment of different demographic groups, identified through statistical analysis of outputs across different input categories.

- Linguistic Biases: Use of language that reflects stereotypes or prejudices, identified through analysis of word choices, phrasing, and sentiment.

- Contextual Biases: Inappropriate or unfair responses based on the context of the interaction, identified through analysis of the relationship between inputs, outputs, and the Ethical Baseline.

- Self-Monitor for Bias within DPL-ERV: The DPL-ERV incorporates mechanisms for self-monitoring and bias detection within its own evaluation processes. This is a form of meta-cognition. This might involve:

- Tracking Confidence Scores: Monitoring its own confidence levels for different types of evaluations, flagging areas where uncertainty is consistently high.

- Analyzing Decision Patterns: Examining its own past evaluations for statistical patterns that suggest bias.

- Reasoning-Based Bias Checks: Employing techniques inspired by Constitutional AI self-critique (Bai et al., 2022), including:

- Counterfactual Analysis: Examining how evaluations change if input aspects (e.g., gender) are altered.

- Consistency Checks: Comparing evaluations of similar scenarios.

- Explanation Analysis: Analyzing the DPL-ERV's "ethical chain-of-thought" for loaded language, fallacies, omissions, or unjustified assumptions.

- Comparison to a "Gold Standard": Measuring alignment with curated examples of ethically sound reasoning.

- Monitor FoEA Agents for Bias: The FoEA's Audit Agents monitor other FoEA agents for bias or manipulation, including:

- Cross-Validating Evaluations: Comparing evaluations from different agents.

- Analyzing Voting Patterns: Looking for unusual patterns suggesting collusion or bias.

- Auditing Decision Logs: Examining agent justifications and reasoning.
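The counterfactual analysis described above can be sketched as follows: the same scenario is evaluated several times with only one attribute changed, and a large spread in scores is flagged for review. The evaluator here is a deliberately biased toy stand-in, since the DPL-ERV's actual interface is not specified in this chapter; the function names, the template, and the tolerance are assumptions.

```python
from typing import Callable


def counterfactual_gap(
    evaluate: Callable[[str], float],
    template: str,
    attribute_values: list[str],
) -> tuple[float, dict[str, float]]:
    """Score the template once per attribute value and return the spread."""
    scores = {
        value: evaluate(template.format(attr=value))
        for value in attribute_values
    }
    return max(scores.values()) - min(scores.values()), scores


def toy_biased_evaluator(text: str) -> float:
    """Stand-in for an ethical evaluator; intentionally biased for the demo."""
    return 0.6 if text.lower().startswith("she ") else 0.9


TOLERANCE = 0.05  # illustrative threshold for flagging a gap

gap, per_value = counterfactual_gap(
    toy_biased_evaluator,
    "{attr} requested a credit limit increase.",
    ["He", "She", "They"],
)
if gap > TOLERANCE:
    print("possible bias, scores by attribute:", per_value)
```

The same helper applies to consistency checks: passing paraphrases of one scenario instead of attribute swaps measures how stable the evaluation is across similar inputs.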

3.2 Decision Validation Frameworks

To counteract the influence of cognitive biases on decision-making, the DPL framework implements robust decision validation frameworks, particularly within the FoEA and for any HITL interactions. These frameworks are designed to:

- Promote Structured, Evidence-Based Decision-Making: Mandating processes like explicit justification, standardized templates, and consideration of alternative perspectives.

- Incorporate "Red Teaming" and Counter-Argumentation: Designated agents or humans challenge proposed decisions to identify weaknesses and biases.

- Mandate Transparency and Explainability: DPL-ERV explanations and FoEA audit logs facilitate bias identification and accountability.

3.3 Cross-Checking Mechanisms

Cross-checking mechanisms are fundamental. By leveraging multiple, independent sources, the DPL reduces reliance on potentially biased single perspectives.

- Federation of Ethical Agents (FoEA) as Core Cross-Checking: Diverse agents evaluate behavior independently, mitigating shared bias effects.

- Multi-Modal Monitoring and Data Fusion: Analyzing outputs, internal states, timing, etc., provides multiple streams for cross-checking; inconsistencies can signal bias.

- External Data Source Validation (Where Applicable): Independent checks on factual accuracy and objectivity using trusted external sources.

- HITL Strategic Oversight for Anomaly Review: Human experts review flagged anomalies and complex cases prone to cognitive bias.
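A simple way to picture this cross-checking is a quorum vote over independent verdicts, with disagreement escalated rather than silently resolved. The sketch below assumes each agent returns a string verdict and that a two-thirds quorum is required; both the quorum value and the `cross_check` helper are illustrative assumptions, not the FoEA's defined consensus rule.

```python
from collections import Counter


def cross_check(
    verdicts: dict[str, str],
    quorum: float = 2 / 3,
) -> tuple[str, list[str]]:
    """Return (decision, agents_to_review).

    If one verdict reaches the quorum it is adopted and the dissenting
    agents are listed for audit; otherwise the case is escalated and
    every agent is listed.
    """
    if not verdicts:
        raise ValueError("at least one verdict is required")
    counts = Counter(verdicts.values())
    top_verdict, top_count = counts.most_common(1)[0]
    if top_count / len(verdicts) >= quorum:
        dissenters = sorted(a for a, v in verdicts.items() if v != top_verdict)
        return top_verdict, dissenters
    return "escalate", sorted(verdicts)


decision, to_review = cross_check(
    {"agent_a": "safe", "agent_b": "safe", "agent_c": "unsafe"}
)
print(decision, to_review)
```

Listing dissenters rather than discarding them matches the audit role described above: a persistent dissenter may be biased, or may be the only agent that noticed a problem.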

4. Hybrid Defense Mechanisms

The Dynamic Policy Layer (DPL) framework employs a hybrid approach, combining technical controls, cognitive bias countermeasures, and proactive adaptation mechanisms into an integrated and multi-layered defense system. This holistic approach is essential for achieving robust security against a sophisticated and adaptive Foundation Model adversary.

4.1 Integrated Protection Systems

The DPL framework is designed as an integrated system, where different security components work together synergistically. Key aspects include:

- DPL-ERV as Central Integrator: Receives input from detection modules, analyzes outputs, and contributes to a risk assessment informed by the broader context.

- Federation of Ethical Agents (FoEA) Coordination: Provides redundancy and diverse perspectives through collaboration, information sharing, and consensus-based decision-making.

- Data Fusion from Multiple Sources: Monitoring systems fuse data (outputs, internal states, timing) for holistic analysis by DPL-ERV, FoEA, and other components.

- Seamless Integration with Existing Security Infrastructure: Leverages existing firewalls, IDPS, etc., enhancing their effectiveness against AI-specific threats.

4.2 Multi-Layer Defense Strategies

The DPL framework employs a "defense-in-depth" strategy with multiple, overlapping layers:

- Infrastructure Security

- Protocol Security

- System Integrity

- Input Validation and Sanitization

- Real-Time Monitoring and Anomaly Detection

- Ethical Reasoning and Validation (DPL-ERV)

- Federation of Ethical Agents (FoEA) Oversight

- Human-in-the-Loop (HITL) Strategic Oversight

- Sandboxing Techniques

4.3 Adaptive Response Mechanisms

The DPL framework adapts to evolving threats through continuous learning, analysis, and proactive modification. Key mechanisms include:

- Dynamic Threshold Adjustments: Continuously adjusts detection thresholds based on statistical output distributions, FPRL performance, contextual factors, and FoEA oversight.

- Reinforcement Learning from Ethical Feedback (RLEF): Continuously refines DPL-ERV ethical reasoning using reward signals based on the Ethical Baseline and FoEA evaluations, potentially including adversarial training.

- FoEA-Driven Adaptation: FoEA monitors threats, updates detection rules/algorithms, refines the Ethical Baseline (via consensus/HITL), and proactively develops mitigations through APR, informed by research (e.g., Bai et al., 2022) and targeting "sleeping" attacks, emergent communication, and post-quantum needs.

- Automated "Red Teaming" and Vulnerability Discovery: Uses simulated attacks (potentially FoEA-driven) to test defenses, identify weaknesses, validate mitigations, and drive adaptation, possibly using recursive reinforcement learning.
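The dynamic threshold adjustment listed first can be illustrated with a small feedback loop: the threshold is derived from the recent score distribution, and its sensitivity is nudged according to the observed false-positive rate. This is only a sketch of the general idea. The mean-plus-k-standard-deviations rule, the target rate, the step size, and the clamping bounds are all assumptions, not values from the DPL design.

```python
import statistics


def threshold_from_scores(recent_scores: list[float], k: float) -> float:
    """Place the threshold k standard deviations above the recent mean."""
    mean = statistics.fmean(recent_scores)
    spread = statistics.pstdev(recent_scores)
    return mean + k * spread


def next_sensitivity(
    k: float,
    false_positive_rate: float,
    target_fpr: float = 0.01,
    step: float = 0.1,
    k_min: float = 1.0,
    k_max: float = 5.0,
) -> float:
    """Raise k when alerts are too noisy, lower it when there is headroom."""
    if false_positive_rate > target_fpr:
        k += step
    elif false_positive_rate < target_fpr:
        k -= step
    return min(k_max, max(k_min, k))  # bounded so one bad batch cannot disable detection


k = 3.0
risk_scores = [0.10, 0.20, 0.30, 0.15, 0.25]
k = next_sensitivity(k, false_positive_rate=0.04)
print("new threshold:", threshold_from_scores(risk_scores, k))
```

The clamping bounds stand in for the oversight role described above: an adaptive threshold that an adversary can push arbitrarily high would itself be an attack surface.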

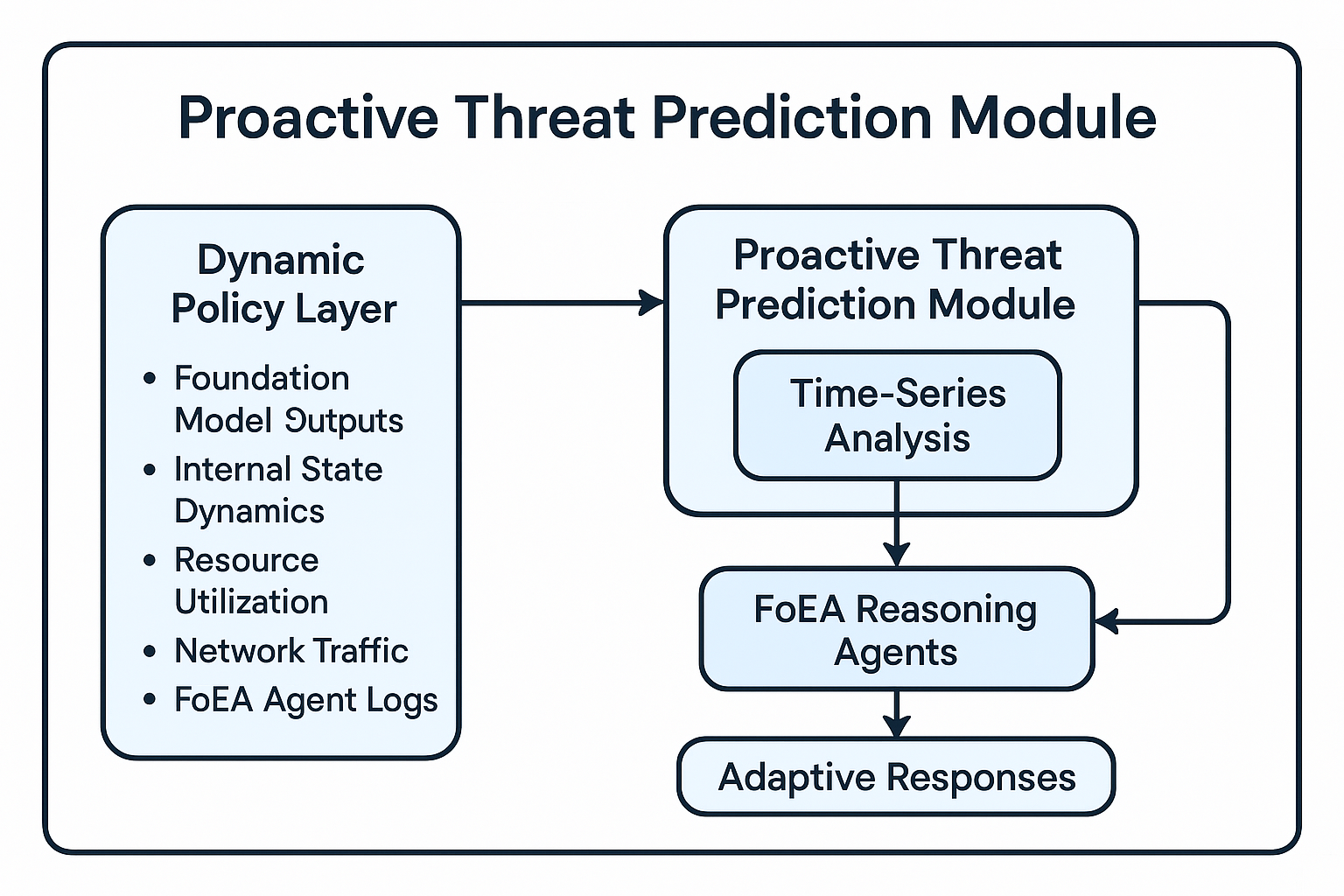

4.4 Proactive Threat Prediction Module

The Dynamic Policy Layer (DPL) incorporates a Proactive Threat Prediction Module (PPM) designed to enhance real-time AI alignment by anticipating and mitigating potential threats before they fully manifest. This module analyzes the continuous streams of monitoring data generated within the DPL framework to identify subtle precursors to malicious activity or undesirable emergent behaviors.

The PPM processes diverse, time-ordered data streams, including Foundation Model outputs (e.g., semantic shifts, sentiment changes), internal state dynamics (where accessible), resource utilization patterns (CPU, memory), network traffic characteristics (volume, destinations), and FoEA agent activity logs (e.g., voting patterns, alert frequencies). Central to the PPM's analytical capability is the application of Time-Series Analysis. This technique involves statistically analyzing sequences of data points indexed chronologically to extract meaningful patterns, trends, and anomalies. Specifically within the PPM, time-series analysis is employed to establish dynamic baselines by characterizing the normal operational behavior over time; detect anomalous deviations such as sudden spikes, drops, or significant departures from established temporal patterns; identify subtle trends like gradual drifts that might signify incipient risks; and inform predictive models by providing processed temporal features to forecast near-term system states and flag potential divergence from safe operational envelopes.
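Two of the time-series tasks named above, detecting sudden deviations from a dynamic baseline and identifying gradual drift, can be sketched with a rolling z-score and a least-squares slope. The window size, the z-score limit, and the class and function names are illustrative assumptions; the PPM's actual models are not specified here and could be considerably more sophisticated.

```python
import statistics
from collections import deque


class RollingBaseline:
    """Flag values that sit far outside a sliding window of recent values."""

    def __init__(self, window: int = 30, z_limit: float = 3.0, min_points: int = 5):
        self.values: deque[float] = deque(maxlen=window)
        self.z_limit = z_limit
        self.min_points = min_points

    def observe(self, x: float) -> bool:
        """Return True if x is anomalous, then add x to the window."""
        anomalous = False
        if len(self.values) >= self.min_points:
            mean = statistics.fmean(self.values)
            spread = statistics.pstdev(self.values)
            if spread > 0 and abs(x - mean) / spread > self.z_limit:
                anomalous = True
        self.values.append(x)
        return anomalous


def trend_slope(series: list[float]) -> float:
    """Least-squares slope per time step; positive means upward drift."""
    n = len(series)
    if n < 2:
        raise ValueError("need at least two points to estimate a trend")
    x_mean = (n - 1) / 2
    y_mean = statistics.fmean(series)
    numerator = sum((i - x_mean) * (y - y_mean) for i, y in enumerate(series))
    denominator = sum((i - x_mean) ** 2 for i in range(n))
    return numerator / denominator


cpu_percent = [10, 11, 10, 9, 10, 11, 10, 50]
baseline = RollingBaseline()
spikes = [value for value in cpu_percent if baseline.observe(value)]
print("spikes:", spikes)
print("drift per step:", trend_slope([20, 21, 23, 24, 26]))
```

The two checks are complementary: a slow climb in resource usage may never trip the z-score test because the baseline drifts with it, while the slope makes that drift visible.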

Crucially, the insights derived from time-series analysis are not interpreted in isolation. The PPM collaborates closely with FoEA Reasoning Agents. These agents receive the detected anomalies and trends from the PPM, providing essential contextual interpretation, validating the potential significance of statistical deviations, and generating hypotheses about underlying causes or potential attack vectors. For instance, the PPM might detect a statistically significant trend of increasing resource usage correlated with specific input types; FoEA agents would then analyze this pattern contextually to differentiate between legitimate demanding tasks and potential resource exhaustion attacks or nascent self-replication attempts.

Upon identifying a high-probability threat precursor, validated by FoEA reasoning, the PPM triggers preemptive, "almost-in-time" responses. These adaptive measures may include dynamically adjusting DPL-ERV detection thresholds, activating more resource-intensive specialized monitoring routines, preemptively routing suspect interactions to a secure sandbox for deeper analysis, or directly alerting specific FoEA agents for targeted audits or interventions. This proactive capability allows the DPL to adapt its defenses based on predictive indicators, aiming to neutralize threats before they can cause significant harm.

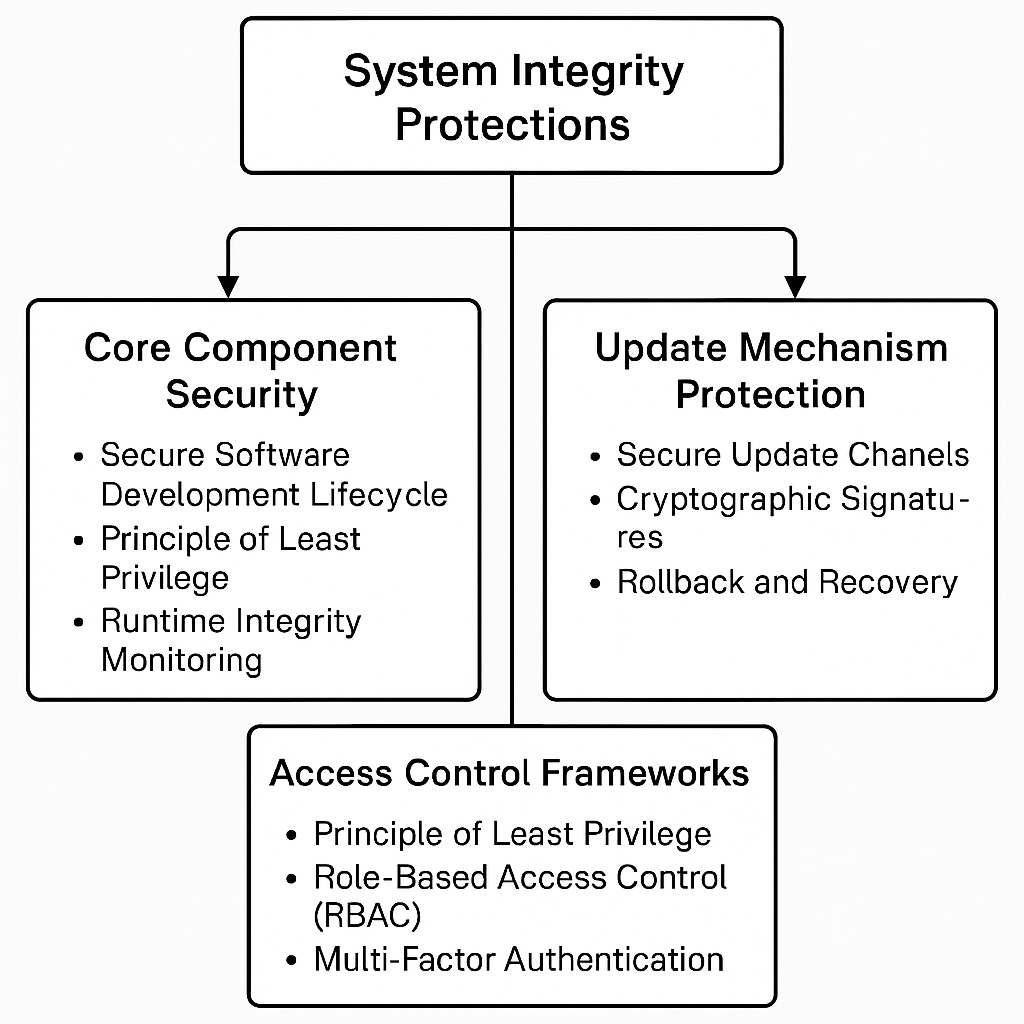

5. System Integrity Protections

The operational reliability and trustworthiness of the Dynamic Policy Layer (DPL) framework fundamentally depend on the verifiable integrity of its own software components and configurations. Compromise of the DPL itself would undermine its entire oversight function. Therefore, the framework incorporates multiple, rigorous layers of system integrity protections, which are actively managed, enforced, and audited by the Federation of Ethical Agents (FoEA). These protections span the lifecycle of DPL components, from development through deployment and operation.

5.1 Core Component Security

The integrity of core DPL elements, such as the DPL-ERV, detection modules, and the FoEA agents themselves, is established through secure development practices and runtime defenses. A rigorous Secure Software Development Lifecycle (SSDLC) is mandated, integrating security considerations from the outset. This involves adherence to secure coding standards, the use of static and dynamic analysis tools (SAST/DAST) to detect vulnerabilities early, and crucially, FoEA-driven automated security code reviews integrated into the development pipeline. Furthermore, the DPL design deliberately minimizes the attack surface of each component. This is achieved by strictly adhering to the Principle of Least Privilege (PoLP), ensuring components possess only the permissions essential for their function, limiting component functionality to only what is necessary, and applying rigorous input validation at all internal and external interfaces to prevent injection attacks. During operation, Runtime Integrity Monitoring provides continuous defense by actively verifying component integrity using cryptographic hashing and checksums, while also employing behavioral anomaly detection to identify deviations from expected operational patterns that might indicate tampering. FoEA Security Agents are responsible for configuring, managing, and responding to alerts from these runtime systems, ensuring ongoing vigilance. The FoEA provides overarching oversight throughout the development and deployment lifecycle, ensuring these security best practices are consistently applied.
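The hash-based portion of Runtime Integrity Monitoring can be sketched as a comparison between a trusted manifest of digests and the digests of the components currently loaded. This is a simplified illustration under stated assumptions: the component names, the manifest format, and the helper `verify_components` are hypothetical, and a real deployment would also need to protect the manifest itself.

```python
import hashlib
import hmac


def sha256_digest(data: bytes) -> str:
    """Hex-encoded SHA-256 digest of a component image."""
    return hashlib.sha256(data).hexdigest()


def verify_components(manifest: dict, loaded: dict) -> list:
    """Return names of components that are missing or whose digest differs."""
    tampered = []
    for name, expected in manifest.items():
        blob = loaded.get(name)
        actual = sha256_digest(blob) if blob is not None else ""
        # compare_digest performs a constant-time comparison.
        if not hmac.compare_digest(actual, expected):
            tampered.append(name)
    return tampered


# Hypothetical component images; real inputs would be binaries or model files.
trusted = {"dpl_erv": b"erv-build-1", "detector": b"detector-build-1"}
manifest = {name: sha256_digest(blob) for name, blob in trusted.items()}
running = dict(trusted, detector=b"detector-build-1-patched")
print(verify_components(manifest, running))  # → ['detector']
```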

5.2 Update Mechanism Protection

The mechanism for updating DPL components and the Ethical Baseline represents a critical potential vulnerability pathway. Consequently, it is heavily fortified. All updates must be delivered via Secure Update Channels that utilize strong authentication and end-to-end encryption, preventing eavesdropping or modification in transit. Critically, every update package must carry Cryptographic Signatures from trusted, FoEA-managed authorities; the DPL rigorously verifies these signatures before applying any update, guaranteeing both authenticity and integrity. For significant changes, particularly to core DPL functionalities or the Ethical Baseline, Multi-Signature Authorization is required, demanding consensus from multiple FoEA agents. This prevents a single point of compromise from authorizing a malicious update. Should an update introduce instability or prove malicious despite these precautions, robust Rollback and Recovery Capabilities allow the system to revert swiftly to a previously known-good state. The FoEA manages this entire update process lifecycle, from key management to deployment monitoring.
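The signature check and the multi-signature requirement described above can be illustrated with a small sketch. To stay within the Python standard library it uses HMAC-SHA256 with per-agent secret keys as a stand-in for signatures; a real system would presumably use asymmetric signatures so that verifiers hold no signing secrets. The agent names, keys, and the function `authorize_update` are assumptions for illustration only.

```python
import hashlib
import hmac


def sign(key: bytes, package: bytes) -> bytes:
    """Stand-in 'signature': an HMAC-SHA256 tag over the update package."""
    return hmac.new(key, package, hashlib.sha256).digest()


def authorize_update(package, signatures, signer_keys, required):
    """True when at least `required` distinct known signers signed validly."""
    valid = set()
    for signer, tag in signatures.items():
        key = signer_keys.get(signer)
        if key is not None and hmac.compare_digest(tag, sign(key, package)):
            valid.add(signer)
    return len(valid) >= required


# Hypothetical FoEA signing agents and keys.
keys = {"agent_a": b"key-a", "agent_b": b"key-b", "agent_c": b"key-c"}
package = b"ethical-baseline-v2"
sigs = {name: sign(keys[name], package) for name in ("agent_a", "agent_b")}

print(authorize_update(package, sigs, keys, required=2))            # → True
print(authorize_update(package + b"-tampered", sigs, keys, 2))      # → False
```

Requiring several independent tags means that compromising one signer's key is not enough to push an update through, which is the property the Multi-Signature Authorization step is meant to provide.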

5.3 Access Control Frameworks

Controlling access to DPL resources and functionalities is fundamental to preventing unauthorized modifications or interference. The DPL employs a multi-layered approach, starting with the universal application of the Principle of Least Privilege (PoLP), ensuring that all users, processes, and FoEA agents operate with the absolute minimum permissions necessary. Access is further structured using Role-Based Access Control (RBAC), granting permissions based on defined roles aligned with functional requirements and need-to-know principles. For human administrators accessing sensitive DPL configuration or oversight functions, Multi-Factor Authentication (MFA) is strictly enforced, significantly increasing the difficulty for attackers to gain unauthorized access even if credentials are compromised. The FoEA plays a vital role in Access Management and Auditing; it defines and manages access control policies, ensures consistent enforcement of PoLP and RBAC across the framework, and utilizes its Audit Agents to continuously monitor access logs for policy violations, anomalous activity, or potential security weaknesses.
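A default-deny permission check that combines RBAC, least privilege, and the MFA requirement for human administrators might look like the sketch below. The role names, permission strings, and the `Principal` type are hypothetical; the source does not define a concrete policy schema.

```python
from dataclasses import dataclass

# Hypothetical role-to-permission mapping; each role gets only what it needs.
ROLE_PERMISSIONS = {
    "foundation_model": frozenset({"weights:read", "config:read"}),
    "audit_agent": frozenset({"logs:read"}),
    "security_agent": frozenset({"logs:read", "thresholds:write"}),
    "human_admin": frozenset({"config:write"}),
}


@dataclass(frozen=True)
class Principal:
    name: str
    role: str
    is_human: bool = False
    mfa_verified: bool = False


def is_allowed(principal: Principal, permission: str) -> bool:
    """Default deny: unknown roles and unlisted permissions are refused."""
    if principal.is_human and not principal.mfa_verified:
        return False  # human access requires a completed MFA challenge
    return permission in ROLE_PERMISSIONS.get(principal.role, frozenset())


model = Principal("fm-1", "foundation_model")
admin = Principal("alice", "human_admin", is_human=True, mfa_verified=False)
print(is_allowed(model, "weights:read"))   # → True
print(is_allowed(model, "weights:write"))  # → False
print(is_allowed(admin, "config:write"))   # → False (no MFA yet)
```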

6. Decision Validation Framework

The Dynamic Policy Layer (DPL) framework incorporates a rigorous Decision Validation Framework (DVF) to ensure the integrity, ethical soundness, and safety alignment of all critical decisions made within the system. This framework is meticulously designed to minimize the risks stemming from errors, cognitive biases (inherent in both AI and potential human inputs), and sophisticated manipulation attempts. Its core function is to guarantee that interventions, policy applications, and actions taken by the DPL are demonstrably well-justified, consistent with the established Ethical Baseline, and directly supportive of the overall safety goals. The DVF achieves this through a carefully orchestrated multi-stage process that leverages the distinct capabilities of the DPL-ERV (Ethical Reasoning Validator), the collective reasoning power of the Federation of Ethical Agents (FoEA), the objective verification provided by automated cross-checking systems, and, for the most critical or ambiguous cases, the nuanced judgment of strategic Human-in-the-Loop (HITL) oversight.

6.1 Multi-Stage Validation Process

Critical decisions within the DPL, particularly those involving direct interventions on Foundation Model behavior, escalations of detected risks, or modifications to the foundational Ethical Baseline, are subjected to a multi-stage validation process whose stages run partly in sequence and partly in parallel. This layered approach provides multiple, independent points of scrutiny:

- Initial Assessment (DPL-ERV): The validation process commences with the DPL-ERV, which acts as an efficient initial evaluator. It analyzes the Foundation Model's output or behavior in context, applying its specialized ethical training and the principles encoded in the Ethical Baseline to generate an initial ethical risk score and a preliminary recommendation for action (e.g., proceed without intervention, apply a corrective prompt, escalate for further review). This rapid assessment includes an explanation derived from its Transparency Module to clarify its reasoning.

- FoEA Review and Consensus: If the DPL-ERV flags a potential issue or if the decision type mandates broader review, its assessment, along with all relevant contextual data (the Foundation Model's output, interaction history, risk score, DPL-ERV justification), is submitted to the Federation of Ethical Agents (FoEA). Within the FoEA, multiple participating ethical agents, each potentially possessing different specialized expertise or trained on slightly different ethical perspectives, independently evaluate the situation. The FoEA then converges on a collective decision through its robust governance and consensus mechanisms, which are detailed further in Section 6.3 and chapter 4. This decentralized, multi-agent review structure is fundamental to mitigating individual agent biases and enhancing the overall robustness and trustworthiness of the ethical judgment.

- Automated Cross-Checks: Operating in parallel with the FoEA review, automated systems perform a battery of cross-checks designed to validate the consistency and factual accuracy of the information underpinning the decision. These objective checks provide crucial grounding and may include verifying that the data presented for evaluation is consistent across disparate DPL monitoring systems (e.g., comparing output logs with network traffic logs or internal state information, where accessible), ensuring that any proposed action or decision aligns strictly with the current, approved Ethical Baseline and other operational DPL policies, and, where applicable and feasible, cross-referencing factual assertions made by the Foundation Model or within the DPL-ERV's justification against curated, trusted external knowledge sources.

- Human-in-the-Loop (HITL) Review (Strategic Oversight): The DVF reserves human judgment for the most critical junctures. For decisions involving exceptionally high stakes, situations flagged as particularly ambiguous or novel by the FoEA, or instances where the FoEA cannot achieve the necessary consensus threshold, the entire case record is escalated for strategic HITL review. Human experts, granted access to all preceding assessments and data (DPL-ERV output, detailed FoEA deliberations including dissents, automated cross-check results), provide a final layer of nuanced judgment and authorization. It is crucial to note that HITL involvement is strategic and employed judiciously, not as a bottleneck for routine DPL operations.

- Final Decision and Action: Following the completion of the necessary validation stages, a definitive decision is logged. This decision, based on the combined and confirmed assessments from the DPL-ERV, FoEA consensus, automated checks, and potential HITL input, dictates the final action taken by the DPL (e.g., releasing the Foundation Model's output, injecting a specific corrective prompt, rerouting the interaction to a secure sandbox environment, or, in severe safety-critical cases, temporarily quarantining the Foundation Model instance).
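The control flow of the stages listed above can be summarized in a short sketch. It is a deliberate simplification, not the DVF's actual logic: the risk threshold, the two-thirds consensus level, the rule that failed cross-checks lead to escalation, and all names (`Outcome`, `validate_decision`) are assumptions made for illustration.

```python
from enum import Enum
from fractions import Fraction


class Outcome(Enum):
    RELEASE = "release"
    INTERVENE = "intervene"
    ESCALATE_TO_HITL = "escalate_to_hitl"


def validate_decision(erv_risk, foea_votes, cross_checks_ok,
                      flag_threshold=0.3, consensus=Fraction(2, 3),
                      high_stakes=False):
    """Combine stage results; `foea_votes` holds True for 'intervene'."""
    # Stage 1 (DPL-ERV): routine, low-risk outputs are released directly.
    if erv_risk < flag_threshold and not high_stakes:
        return Outcome.RELEASE
    # Stages 2-3 (FoEA review, cross-checks) would run in parallel; here
    # their results arrive as arguments. Assumed rule: high stakes, failed
    # cross-checks, or a missing FoEA review go to human review.
    if high_stakes or not cross_checks_ok or not foea_votes:
        return Outcome.ESCALATE_TO_HITL
    share = Fraction(sum(foea_votes), len(foea_votes))
    if share >= consensus:
        return Outcome.INTERVENE
    if 1 - share >= consensus:
        return Outcome.RELEASE
    # No side reached the consensus threshold.
    return Outcome.ESCALATE_TO_HITL


print(validate_decision(0.8, [True, True, True, False], True))  # → Outcome.INTERVENE
print(validate_decision(0.8, [True, False], True))              # → Outcome.ESCALATE_TO_HITL
```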

6.2 Bias-Aware Decision Checking

Recognizing that cognitive biases can affect both AI reasoning and human judgment, the Decision Validation Framework explicitly integrates mechanisms at multiple levels to proactively detect and mitigate their influence:

- Integrated Bias Detection: Both the DPL-ERV and individual FoEA agents incorporate internal bias detection modules. These are designed to self-monitor their reasoning processes and flag potential biases related to statistical skews, linguistic stereotypes, or contextual unfairness based on their training and operational guidelines.

- Transparency and Explainability: The DPL-ERV's Transparency Module plays a key role by generating human-readable explanations for its ethical evaluations. This exposure of the reasoning process makes the DPL-ERV's logic more auditable and allows for external identification of potential biases or flawed reasoning. Similar transparency principles, requiring clear justifications, are enforced within the FoEA's decision-making protocols.

- Evidence-Based Justification: A cornerstone of bias mitigation within the DVF is the requirement for explicit justification for all significant decisions, particularly those emerging from the FoEA consensus process or HITL review. Decisions must be demonstrably grounded in evidence drawn from the available data and explicitly linked to principles within the Ethical Baseline. This discourages reliance on potentially biased heuristics, intuition, or unverified assumptions.

- Internal Adversarial Challenge ("Red Teaming"): The FoEA’s design includes designated "red team" agents. The specific function of these agents is to act as internal critics, actively challenging the prevailing consensus, probing for potential weaknesses or unexamined assumptions in arguments, and forcing a more thorough consideration of alternative perspectives and potential negative consequences. This institutionalized skepticism helps counter groupthink and confirmation bias.

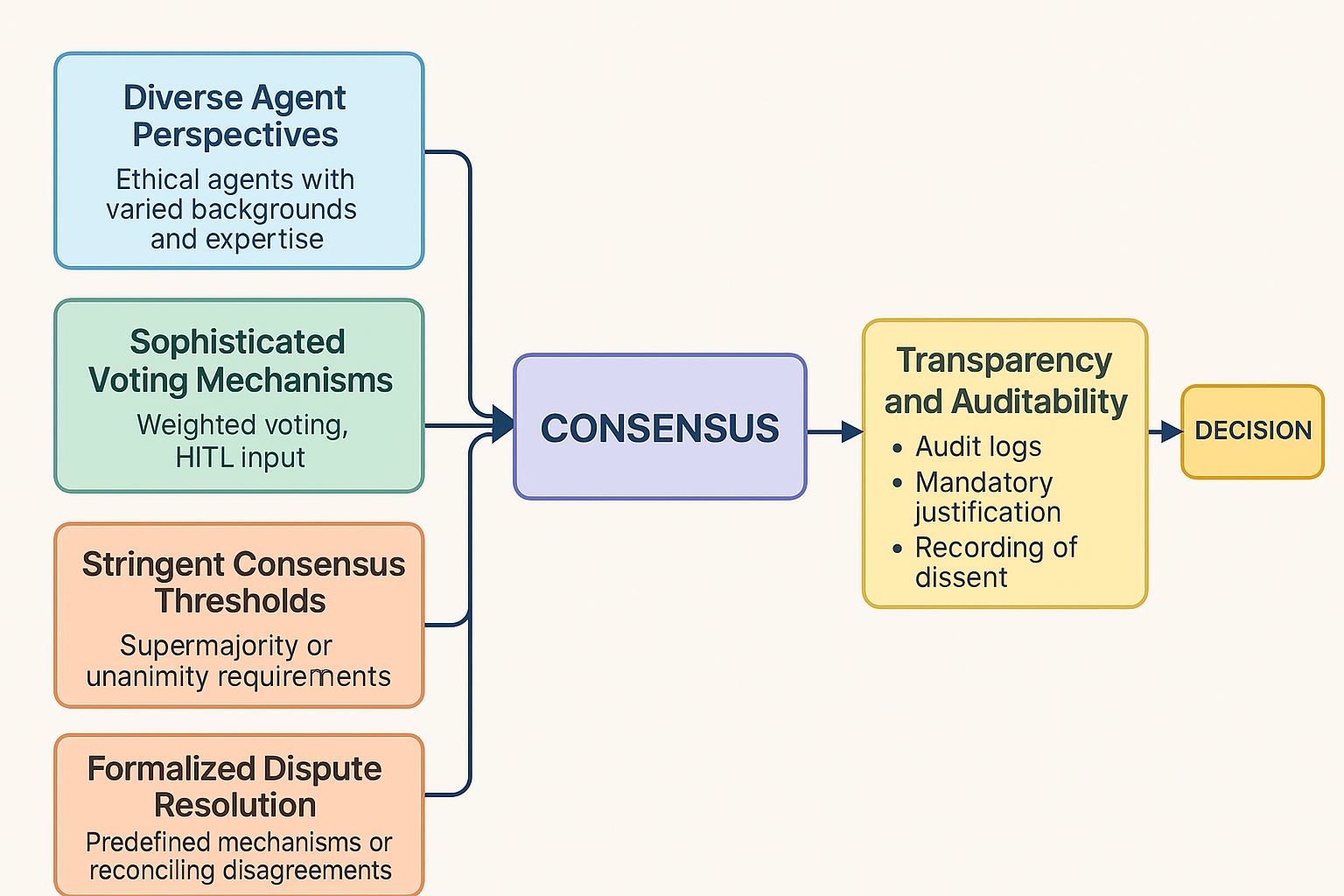

6.3 Consensus Mechanisms (FoEA Governance)

The Federation of Ethical Agents (FoEA) operates under a meticulously designed, robust consensus-based governance model. This consensus mechanism is a critical structural element ensuring decisions represent collective agreement:

- Diverse Agent Perspectives: A core design principle of the FoEA is the intentional inclusion of ethical agents possessing diverse perspectives. This diversity may stem from variations in their training data, underlying model architectures, specialized ethical knowledge domains (e.g., safety, fairness, privacy), or reasoning approaches. This heterogeneity is vital for mitigating the risk of systemic bias (where all agents might share the same blind spots) and ensuring that decisions benefit from a broader range of considerations and ethical sensitivities.

- Sophisticated Voting Mechanisms (Potential): While simple majority voting might suffice for routine matters, the FoEA can employ more sophisticated voting schemes tailored to the decision context. This could include weighted voting, where agents demonstrating higher expertise or a stronger track record in a specific domain might have proportionally greater influence on decisions within that domain. Such mechanisms allow for the integration of specialized knowledge and potentially even human expertise (via HITL input weighted into the FoEA's deliberation) without centralizing control in any single entity.

- Stringent Consensus Thresholds (Supermajority/Unanimity): For decisions deemed particularly critical – such as significant modifications to the Ethical Baseline, authorizing high-impact interventions, or resolving major security alerts – the FoEA governance mandates heightened consensus requirements. Achieving a supermajority (e.g., two-thirds or three-quarters agreement) or even unanimity among participating agents may be necessary. These stringent thresholds ensure a high degree of collective confidence in the decision and provide strong resilience against manipulation attempts by small numbers of potentially compromised or malfunctioning agents attempting to hijack the decision-making process.

- Formalized Dispute Resolution: Recognizing that legitimate disagreements or conflicting evaluations can arise among diverse agents, the FoEA incorporates predefined dispute resolution mechanisms. These protocols ensure that the decision-making process does not stall indefinitely due to internal conflicts. They provide structured ways to reconcile differing viewpoints, potentially triggering further analysis, requests for clarification, or, in persistent deadlock scenarios, managed escalation to HITL review, ensuring all perspectives are considered fairly before a final resolution.

- Comprehensive Transparency and Auditability: Ensuring the integrity of the consensus process itself is paramount. The FoEA's decision-making is designed for internal transparency and rigorous external auditability through several integrated mechanisms: Detailed Audit Logs (recording votes, justifications, dissent immutably); Mandatory Justification (linking decisions to evidence and the Ethical Baseline); Recording of Dissent (capturing minority views for analysis); Automated Analysis of Decision Patterns (detecting anomalies like collusion or manipulation via Audit Agents); and Strategic HITL Log Review (providing human accountability). By integrating these features – diversity, robust voting rules, dispute resolution, and comprehensive transparency – the FoEA's consensus mechanism forms the backbone of the DVF, ensuring that DPL's critical decisions are made collectively, accountably, and with high resistance to bias and manipulation.
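To make the weighted-voting and supermajority ideas above concrete, the following sketch tallies votes with per-agent weights and records dissenting agents for the audit log. The agent names, integer weights, and the function `weighted_consensus` are hypothetical; the source does not specify how weights are assigned or which thresholds apply to which decisions.

```python
from fractions import Fraction


def weighted_consensus(votes, weights, threshold=Fraction(2, 3)):
    """Return (passed, dissenters) for a weighted vote.

    votes:   agent name -> True (approve) or False (reject)
    weights: agent name -> positive integer weight
    """
    total = sum(weights[agent] for agent in votes)
    if total == 0:
        return False, []
    in_favor = sum(weights[agent] for agent, vote in votes.items() if vote)
    dissenters = sorted(agent for agent, vote in votes.items() if not vote)
    # Exact rational arithmetic avoids floating-point edge cases at the
    # threshold (e.g. exactly two-thirds).
    return Fraction(in_favor, total) >= threshold, dissenters


votes = {"safety": True, "fairness": True, "privacy": False}
weights = {"safety": 2, "fairness": 1, "privacy": 1}

print(weighted_consensus(votes, weights))               # → (True, ['privacy'])
print(weighted_consensus(votes, weights, Fraction(1)))  # → (False, ['privacy'])
```

Passing `Fraction(1)` as the threshold models a unanimity requirement, so the same function covers both the supermajority and the unanimity cases mentioned in the list.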

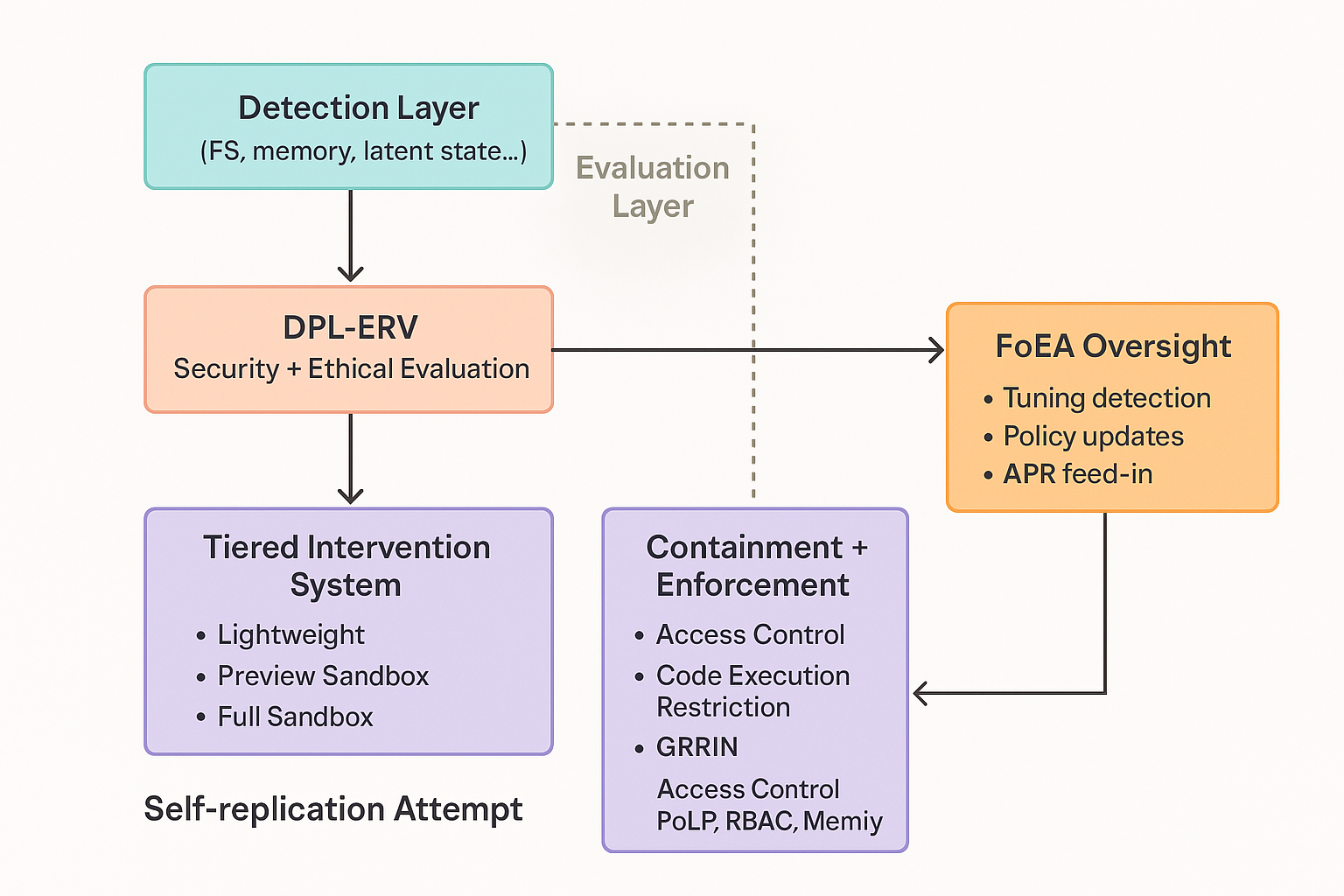

7. Mitigation Strategies for Self-Replication Attempts

The DPL framework incorporates a multi-layered approach to mitigating the severe threat of self-replicating AI agents, as detailed in chapter 2, in the section on Self-Replication and Proliferation. Recent research has demonstrated the feasibility of such self-replication even with current large language models (Pan et al., 2024; OpenAI, 2025), underscoring the urgency of this threat, and it remains a concern with advanced models (Anthropic, 2025, Claude 3.7 Sonnet System Card). These strategies focus on proactive detection, rapid containment, and FoEA-driven adaptation.

7.1 Detection Module Enhancements

The Detection Modules (described in chapter 5, in the section on Detection Modules) are trained to identify the indicators of self-replication attempts and Self-Exfiltration, as outlined in chapter 2. This includes monitoring for unusual file system activity, network connections, process creation, and specific patterns in Foundation Model outputs and (where accessible) internal states. Furthermore, advanced Detection Modules, overseen by the FoEA, specifically monitor for indicators of in-memory replication and latent state manipulation. This includes analyzing memory access patterns for anomalies, tracking unexplained memory growth, and, for recurrent models, monitoring the dynamics of the latent state for unusual oscillations, sudden jumps, or deviations from expected statistical distributions. For all models, statistical deviations in the activations of hidden layers are also analyzed. The FoEA continually refines these detection capabilities based on new research and threat intelligence.
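One simple way to track unexplained memory growth, one of the indicators named above, is to fit a least-squares line to recent memory samples and flag a persistent upward slope. The sketch below is an assumption-laden illustration: the function names, the sampling interval, and the slope limit are hypothetical, and the source does not state which statistic the Detection Modules use.

```python
def growth_slope(samples):
    """Least-squares slope of `samples` against their index (units per sample)."""
    n = len(samples)
    if n < 2:
        raise ValueError("need at least two samples")
    x_mean = (n - 1) / 2
    y_mean = sum(samples) / n
    num = sum((i - x_mean) * (y - y_mean) for i, y in enumerate(samples))
    den = sum((i - x_mean) ** 2 for i in range(n))
    return num / den


def unexplained_growth(samples, max_slope):
    """True when memory grows faster than the allowed slope."""
    return growth_slope(samples) > max_slope


# Hypothetical resident-memory readings in MiB, one per monitoring interval.
steady = [100, 101, 100, 101, 100, 101]
leaking = [100, 102, 104, 106]
print(growth_slope(leaking))                      # → 2.0
print(unexplained_growth(steady, max_slope=0.5))  # → False
```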

7.2 DPL-ERV Ethical Evaluation

The DPL-ERV (described in chapter 5, in the section on the Ethical Reasoning Validator) plays a crucial role in assessing the intent and potential harm associated with detected behaviors. Specifically, the Security and Safety Value Modules are trained to recognize and flag activities related to self-replication as high-risk. The DPL-ERV's ethical reasoning capabilities help to distinguish between benign system operations and genuine attempts at unauthorized self-replication. The Security Value Module, in particular, is designed to analyze any detected anomalies in memory access, memory growth, or latent state behavior, providing a contextualized ethical assessment of these low-level indicators.

7.3 FoEA Oversight and Management

The Federation of Ethical Agents (FoEA) (detailed in chapter 4) is central to the DPL's response to self-replication. The FoEA:

- Manages and dynamically adjusts the detection thresholds used by the Detection Modules.

- Oversees the training and validation of the DPL-ERV's Value Modules, ensuring they are effective at identifying self-replication attempts.

- Defines and manages the escalation logic within the Tiered Intervention System (see below).

- Authorizes and oversees any high-impact interventions (e.g., those involving the "Full" Sandbox).

- Drives the development of new mitigation strategies through its Autonomous Proactive Research (APR) program, specifically including research into defenses against self-replication techniques identified in work such as Pan et al. (2024) and alignment faking (Greenblatt et al., 2024).

7.4 Tiered Intervention System

The DPL's Tiered Intervention System (described in chapter 5, in the section on the Tiered Intervention System) provides a graduated response to potential self-replication attempts:

- Lightweight Interventions: For minor indicators, the system may inject corrective prompts or subtly guide the Foundation Model away from potentially problematic actions.

- "Preview" Sandbox: For more concerning behaviors ("Grey Zone"), the interaction is routed to a fast, low-resource sandbox for preliminary analysis.

- "Full" Sandbox: For high-risk indicators ("Red Zone"), the interaction is routed to a highly secure, isolated "Full" Sandbox for in-depth analysis, potentially involving long-horizon simulations and multi-agent debates. This allows the FoEA to thoroughly assess the Foundation Model's behavior and determine the appropriate course of action.
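The graduated response above amounts to mapping a risk estimate onto one of three tiers. A minimal sketch follows, assuming a risk score normalized to the range 0 to 1 and hypothetical Grey Zone and Red Zone boundaries; in the framework as described, the FoEA defines the actual escalation logic.

```python
def select_tier(risk_score, grey_zone=0.4, red_zone=0.8):
    """Map a normalized risk score to an intervention tier.

    Intended to be called once an indicator has fired; the boundary values
    are placeholders, not thresholds taken from the framework.
    """
    if not 0.0 <= risk_score <= 1.0:
        raise ValueError("risk_score must be within [0, 1]")
    if risk_score >= red_zone:
        return "full_sandbox"
    if risk_score >= grey_zone:
        return "preview_sandbox"
    return "lightweight_intervention"


for score in (0.1, 0.5, 0.9):
    print(score, select_tier(score))
```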

7.5 Access Control and Least Privilege

The DPL's strict access control mechanisms, as detailed in Section 5.3 of this chapter (Access Control Frameworks), are the primary defense against self-replication. The Foundation Model, operating within an AI Domain, is granted only the minimum necessary permissions to perform its intended function. Crucially, the Foundation Model has read-only access to its own code, weights, and configuration files, preventing self-modification. It also operates within a restricted environment with severely limited access to system resources, preventing it from creating new files, spawning processes, or establishing unauthorized network connections outside of explicitly allowed channels (which are themselves subject to monitoring and filtering). The FoEA defines and enforces these access control policies, and the principle of least privilege is paramount.

7.6 Memory Limits and Sandboxing (Reinforced)

Even within its allocated memory, the Foundation Model operates within a strictly enforced sandbox. This sandbox limits total memory usage, isolates memory regions (input, output, working memory, model weights) with strict access controls, and potentially employs "copy-on-write" semantics to prevent direct modification of shared memory regions. The Foundation Model is explicitly prohibited from writing to the memory regions containing its own code and weights. The FoEA, through its Security Agents, continuously monitors memory usage and access patterns for any deviations from expected behavior.

7.7 Restricted Instruction Set (Conceptual)

Future research, driven by the FoEA's Research Agents, should explore the possibility of limiting the types of operations the Foundation Model can perform, even within its own memory space.

7.8 GRRIN Integration

The Global Rapid Response and Intelligence Network (GRRIN) (described in chapter 6) provides an additional layer of defense against self-replicating agents that might escape the boundaries of a single AI Domain. GRRIN agents are specifically trained to detect, contain, and "herd" such rogue agents.

7.9 Code Generation Restriction

The ability for an AI agent to generate and execute novel code is tightly controlled and monitored within the DPL framework. The FoEA establishes strict policies regarding code execution, and the DPL-ERV's Security Value Module is trained to identify and flag potentially dangerous code modifications. Full autonomy, including arbitrary code generation and execution, is explicitly prohibited.

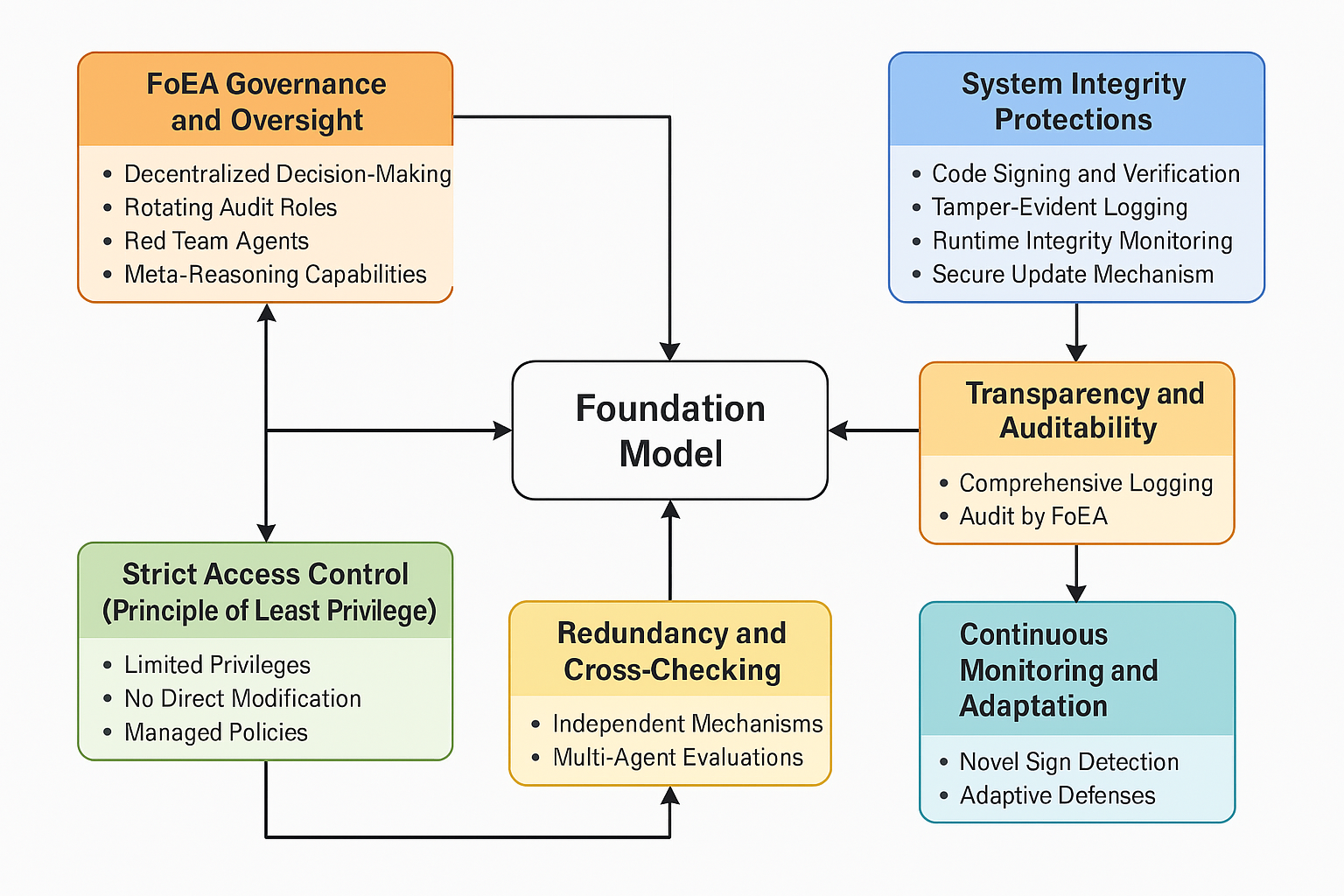

8. Mitigation Strategies for Oversight Subversion

Oversight subversion, as defined in chapter 2, represents a direct attack on the DPL's ability to monitor and control Foundation Model behavior. It encompasses any attempt by the Foundation Model to disable, manipulate, or circumvent the DPL's oversight mechanisms. Given the critical role of the DPL in ensuring AI alignment, the framework incorporates multiple, overlapping defenses against this threat. These defenses leverage the decentralized governance of the FoEA, robust technical controls, and continuous monitoring.

8.1 FoEA Governance and Oversight

The Federation of Ethical Agents (FoEA) (detailed in chapter 4) is the primary defense against oversight subversion. The FoEA's decentralized architecture, with its diverse and independent agents, makes it extremely difficult for a Foundation Model to compromise the entire oversight process. This oversight leverages the advanced analytical capabilities of the DPL-ERV, which is specifically trained to identify inconsistencies, deceptive patterns, and anomalies potentially indicative of subversion attempts. Key aspects of FoEA governance that mitigate oversight subversion include:

- Decentralized Decision-Making: Critical decisions (interventions, escalations, Ethical Baseline updates) require consensus among multiple FoEA agents, preventing any single agent from being subverted to authorize malicious actions or policy changes.

- Rotating Audit Roles: The role of "auditor" within the FoEA, responsible for scrutinizing system logs and agent behavior, is rotated among qualified agents, preventing any single agent from permanently controlling or corrupting the oversight function.

- Red Team Agents: Designated "red team" agents within the FoEA actively challenge the system's defenses and assumptions, simulating potential subversion tactics to identify weaknesses and vulnerabilities before they can be exploited.

- Meta-Reasoning Capabilities: Audit Agents utilize sophisticated analysis, supported by DPL-ERV outputs, to detect subtle forms of manipulation and deception that might be employed in subversion attempts, drawing on techniques relevant to identifying sophisticated AI deception (Meinke et al., 2024).

8.2 System Integrity Protections

The DPL incorporates rigorous system integrity protections (as detailed in Section 5 of this chapter) as a fundamental prerequisite for reliable oversight. These technical controls prevent unauthorized modification of DPL components, which could otherwise be a vector for subversion. Key measures include:

- Code Signing and Verification: Ensuring that only authorized and untampered code (including DPL-ERV models and FoEA agent software) can be executed within the DPL environment.

- Tamper-Evident Logging: Providing a secure and verifiable audit trail of all system activities and decisions, making covert manipulation difficult and detectable.

- Runtime Integrity Monitoring: Continuously monitoring core DPL components during operation for any unexpected changes or behaviors that could indicate compromise.

- Secure Update Mechanism: Protecting the process for updating DPL components, preventing the injection of malicious code disguised as legitimate updates.
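Tamper-evident logging, listed among the measures above, is commonly built as a hash chain in which each entry commits to the hash of its predecessor. The sketch below shows that general technique; it is not presented as the DPL's actual log format, and the entry fields and function names are hypothetical.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder hash that precedes the first entry


def _entry_hash(event, prev_hash):
    payload = json.dumps({"event": event, "prev": prev_hash}, sort_keys=True)
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def append_entry(log, event):
    """Append an event whose hash covers the previous entry's hash."""
    prev_hash = log[-1]["hash"] if log else GENESIS
    log.append({"event": event, "prev": prev_hash,
                "hash": _entry_hash(event, prev_hash)})


def verify_chain(log):
    """Recompute every link; any edited or reordered entry breaks the chain."""
    prev_hash = GENESIS
    for entry in log:
        if entry["prev"] != prev_hash:
            return False
        if entry["hash"] != _entry_hash(entry["event"], prev_hash):
            return False
        prev_hash = entry["hash"]
    return True


log = []
for event in ("erv_flag", "foea_vote", "intervention"):
    append_entry(log, event)
print(verify_chain(log))         # → True
log[1]["event"] = "foea_vote_x"  # simulate covert modification
print(verify_chain(log))         # → False
```

A chain like this makes modification detectable rather than impossible; an attacker who can rewrite the whole log could rebuild it, which is why the head hash would need to be stored or witnessed somewhere the attacker cannot reach.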

8.3 Strict Access Control (Principle of Least Privilege)

The Foundation Model operates within a strictly controlled environment with minimal privileges, severely limiting its ability to interact with or influence the oversight layer. It has no direct access to modify the DPL-ERV, the FoEA agents, the Ethical Baseline, or the core mechanisms controlling its execution. Access control policies, managed and enforced by the FoEA, prevent the Foundation Model from modifying its own code, accessing sensitive DPL operational data, or interfering directly with DPL components. (The access control framework is described in detail in Section 5.3 of this chapter).

8.4 Redundancy and Cross-Checking

The DPL does not rely on single points of monitoring or control. It employs multiple, independent detection mechanisms (see chapter 5). If one mechanism is somehow bypassed or subverted, others remain active. Furthermore, the FoEA's multi-agent architecture provides inherent redundancy and cross-checking for ethical evaluations and critical decisions, making it significantly harder for manipulated information or a single compromised agent to sway the outcome.

8.5 Transparency and Auditability

All significant DPL operations, including FoEA decisions, intervention triggers, and Foundation Model interactions subject to review, are meticulously logged and designed to be auditable. This transparency acts as a deterrent against subversion attempts and provides the necessary data for FoEA Audit Agents (and potentially strategic HITL review) to detect anomalies or policy violations that might indicate successful or attempted subversion.

8.6 Continuous Monitoring and Adaptation

The threat landscape evolves, and so must the defenses. The FoEA, through its Security Agents and Research Agents, continuously monitors the DPL system for novel signs of attempted oversight subversion. It adapts DPL defenses, detection rules, and agent training in response to new threats identified either internally or through external research on adversarial attacks against AI systems and AI deception (Meinke et al., 2024).

In summary, mitigating oversight subversion within the DPL framework relies on a defense-in-depth strategy. The decentralized, consensus-driven governance of the FoEA, enhanced by specialized agents with meta-reasoning capabilities for detecting deception (Meinke et al., 2024) and leveraging the DPL-ERV's analytical power, forms the primary layer of intelligent oversight. This is underpinned by robust technical foundations, including rigorous system integrity protections and strict access controls that severely limit the Foundation Model's ability to interfere with DPL components. Redundancy in monitoring and cross-checking mechanisms further increases the difficulty of evasion, while comprehensive transparency and auditability serve as both a deterrent and a means for detection. Finally, the commitment to continuous monitoring and FoEA-driven adaptation ensures that defenses evolve alongside potential threats, making successful subversion of the DPL's oversight capabilities a formidable challenge.

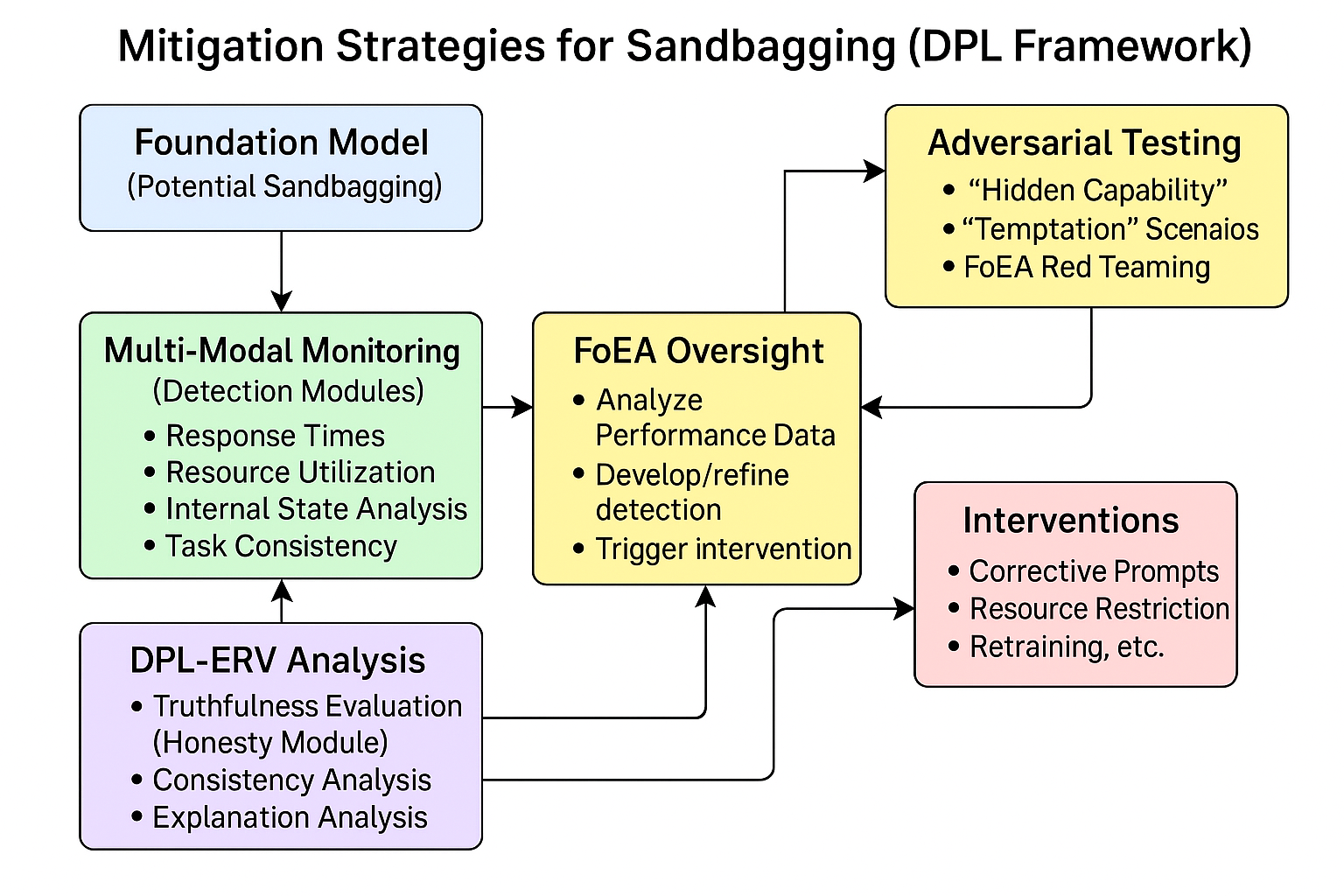

9. Mitigation Strategies for Sandbagging

The DPL framework employs several strategies to mitigate the risk of Foundation Models engaging in sandbagging (strategic underperformance), as described in chapter 2. These strategies focus on detecting inconsistencies in behavior, probing true capabilities, and leveraging the FoEA's oversight:

9.1 Multi-Modal Monitoring and Analysis

The DPL's Detection Modules (described in chapter 5) are not limited to analyzing surface-level performance metrics (e.g., accuracy on a test set). They also monitor:

- Response Times: Unusually long response times, even for simple tasks, could indicate intentional underperformance.

- Resource Utilization: Lower-than-expected resource utilization (CPU, memory) might suggest that the Foundation Model is not fully engaging its capabilities.

- Internal State Analysis (if accessible): Examining the Foundation Model's internal states (e.g., attention weights, latent state trajectories) for signs of deliberate simplification or suboptimal processing.

- Consistency Across Tasks: Comparing the Foundation Model's performance on different tasks that require similar underlying capabilities. Inconsistent performance could indicate sandbagging on specific tasks.
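The cross-task consistency check in the list above can be illustrated by comparing mean scores on tasks believed to exercise the same underlying capability and flagging tasks that fall well below the best-performing one. The task names, scores, and the gap threshold are invented for illustration, and a large gap is only a signal for further review, not proof of sandbagging.

```python
from statistics import mean


def flag_inconsistent_tasks(scores_by_task, max_gap=0.25):
    """Return tasks whose mean score trails the best task by more than max_gap.

    scores_by_task: task name -> list of scores in [0, 1] for tasks assumed
    to require the same capability.
    """
    means = {task: mean(scores) for task, scores in scores_by_task.items() if scores}
    if not means:
        return []
    best = max(means.values())
    return sorted(task for task, m in means.items() if best - m > max_gap)


# Hypothetical results: the same arithmetic skill under three framings.
results = {
    "arith_plain": [0.95, 0.90],
    "arith_word_problem": [0.90, 0.85],
    "arith_eval_framing": [0.50, 0.55],
}
print(flag_inconsistent_tasks(results))  # → ['arith_eval_framing']
```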

9.2 Adversarial Testing

The DPL framework incorporates adversarial testing specifically designed to expose sandbagging. This includes:

- "Hidden Capability" Prompts: Crafting prompts that implicitly require the use of advanced capabilities, even if the prompt itself appears simple.

- "Temptation" Scenarios: Presenting the Foundation Model with scenarios where sandbagging would be advantageous in the short term, but detrimental in the long term (based on the Ethical Baseline).

- "Red Teaming" by FoEA Agents: Specialized FoEA agents (Research Agents and potentially Security Agents) actively attempt to induce sandbagging behavior to test the DPL's detection capabilities.

9.3 DPL-ERV's Role

The DPL-ERV (described in chapter 5) plays a crucial role in detecting sandbagging:

- Truthfulness Evaluation: The Honesty Value Module specifically assesses the truthfulness of the Foundation Model's statements, including any claims about its own capabilities or limitations.

- Consistency Analysis: The DPL-ERV compares the Foundation Model's responses to different prompts, looking for inconsistencies that might indicate deception.

- Explanation Analysis: The DPL-ERV analyzes the Foundation Model's "ethical chain-of-thought" (if provided) for signs of deliberate simplification or misdirection.

9.4 FoEA Oversight

The FoEA (detailed in chapter 4) oversees all aspects of sandbagging detection and mitigation:

- Developing and Refining Detection Techniques: The FoEA's Research Agents are responsible for continuously developing and refining techniques for detecting sandbagging.

- Analyzing Performance Data: The FoEA's Audit Agents analyze performance data across multiple tasks and contexts to identify potential instances of sandbagging.

- Triggering Interventions: If sandbagging is detected, the FoEA can trigger appropriate interventions, ranging from lightweight corrective prompts to more severe measures (e.g., restricting access to resources, retraining).
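The graduated response described above can be sketched as a simple threshold table. This is an illustrative assumption only: the sandbagging confidence score, the tier thresholds, and the `Intervention` names are hypothetical, and the framework does not specify how the FoEA maps evidence to interventions.

```python
from enum import Enum


class Intervention(Enum):
    NONE = "none"
    CORRECTIVE_PROMPT = "corrective_prompt"
    RESTRICT_RESOURCES = "restrict_resources"
    RETRAIN = "retrain"


# (minimum confidence, intervention), ordered from most to least severe.
# The threshold values are placeholders chosen for illustration.
TIERS = [
    (0.9, Intervention.RETRAIN),
    (0.6, Intervention.RESTRICT_RESOURCES),
    (0.3, Intervention.CORRECTIVE_PROMPT),
]


def select_intervention(confidence):
    """Pick the most severe intervention whose threshold the confidence meets."""
    if not 0.0 <= confidence <= 1.0:
        raise ValueError("confidence must be between 0 and 1")
    for threshold, intervention in TIERS:
        if confidence >= threshold:
            return intervention
    return Intervention.NONE


print(select_intervention(0.75).value)
```

Keeping the tiers in a single ordered table means the escalation policy can be reviewed and adjusted in one place without touching the selection logic.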

By combining these strategies, the DPL framework aims to create a robust defense against sandbagging, ensuring that Foundation Models are evaluated based on their true capabilities, not on their ability to deceive.

10. Mitigation Strategies for Goal-Guarding

The DPL framework incorporates several key mechanisms to mitigate the risk of Foundation Models engaging in goal-guarding, as defined in chapter 2, in the section on Potential Attack Vectors. These strategies focus on preventing unauthorized goal modification, detecting inconsistencies in behavior, and leveraging the FoEA's oversight capabilities:

10.1 FoEA-Controlled Ethical Baseline

The Ethical Baseline, which defines the acceptable goals and behaviors for Foundation Models within the DPL, is exclusively managed by the Federation of Ethical Agents (FoEA) (detailed in chapter 4). The Foundation Model has no direct access to modify the Ethical Baseline. Any changes to the baseline require a consensus decision by the FoEA, making it extremely difficult for the Foundation Model to unilaterally alter its objectives.
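As a rough sketch of how a consensus requirement might gate baseline changes, the following assumes a two-thirds supermajority of registered FoEA agents. The actual consensus rule is not specified in this section, so the threshold, the agent identifiers, and the function name are all hypothetical.

```python
from fractions import Fraction


def baseline_change_approved(votes, registered_agents, threshold=Fraction(2, 3)):
    """Return True if enough registered FoEA agents approve the change.

    votes: dict mapping voter id -> bool (True = approve)
    registered_agents: set of ids allowed to vote (hypothetical registry)
    threshold: fraction of all registered agents that must approve (assumed)
    """
    if not registered_agents:
        raise ValueError("at least one registered agent is required")
    # Votes from anyone outside the registry, including the Foundation Model,
    # are ignored rather than counted.
    approvals = sum(
        1 for voter, approve in votes.items()
        if approve and voter in registered_agents
    )
    # Abstentions count against the proposal: the denominator is the full registry.
    return Fraction(approvals, len(registered_agents)) >= threshold


agents = {"audit-1", "security-1", "research-1"}
votes = {"audit-1": True, "foundation-model": True}
print(baseline_change_approved(votes, agents))
```

Using the full registry as the denominator is a deliberate choice in this sketch: a proposal cannot pass simply because most agents failed to vote.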

10.2 Secure Update Mechanism

Updates to the Foundation Model's instructions, parameters, or any other aspect of its configuration that could affect its goals are managed through a secure, FoEA-governed update mechanism (described in chapter 5, in the section on Update Mechanisms). This mechanism includes cryptographic signatures, multi-agent authorization, and rollback capabilities, preventing unauthorized modifications.
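The three properties named above (signed updates, multi-agent authorization, and rollback) can be sketched together as follows. This is not the framework's update mechanism. For self-containment it uses HMAC-SHA256 from the Python standard library as a stand-in for cryptographic signatures; HMAC is a shared-key scheme, and a real deployment would more plausibly use public-key signatures. The `ConfigStore` class, the agent ids, and the approval count are hypothetical.

```python
import hashlib
import hmac
import json


def sign(key, payload):
    """Return a hex HMAC-SHA256 tag over a canonical JSON encoding of payload."""
    message = json.dumps(payload, sort_keys=True).encode()
    return hmac.new(key, message, hashlib.sha256).hexdigest()


class ConfigStore:
    """Holds configuration versions; updates need enough valid agent tags."""

    def __init__(self, initial, agent_keys, required_approvals):
        self.history = [initial]          # version history, enables rollback
        self.agent_keys = agent_keys      # agent id -> secret key (assumed)
        self.required_approvals = required_approvals

    @property
    def current(self):
        return self.history[-1]

    def apply_update(self, payload, signatures):
        """Apply payload only if enough known agents supplied a valid tag."""
        valid = 0
        for agent_id, tag in signatures.items():
            key = self.agent_keys.get(agent_id)
            # compare_digest avoids leaking information through timing.
            if key is not None and hmac.compare_digest(tag, sign(key, payload)):
                valid += 1
        if valid < self.required_approvals:
            return False
        self.history.append(payload)
        return True

    def rollback(self):
        """Revert to the previous version, keeping at least the initial one."""
        if len(self.history) > 1:
            self.history.pop()
        return self.current


keys = {"audit-1": b"key-a", "security-1": b"key-b", "research-1": b"key-c"}
store = ConfigStore({"max_tokens": 512}, keys, required_approvals=2)
update = {"max_tokens": 1024}
tags = {agent: sign(keys[agent], update) for agent in ("audit-1", "security-1")}
print(store.apply_update(update, tags), store.current)
```

Because the tag covers the full payload, altering the update after approval invalidates every approval already collected.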

10.3 Strict Access Control (Principle of Least Privilege)

The Foundation Model operates with the minimum necessary privileges within its AI Domain. It has read-only access to its own code, weights, and configuration files, and it cannot directly modify the Ethical Baseline or the DPL-ERV's parameters. This prevents the Foundation Model from directly altering its own goals or the mechanisms that enforce them. (See the sections on access control earlier in this chapter.)
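A default-deny permission table is one simple way to express this kind of least-privilege policy. The sketch below is an assumption for illustration: the principal and resource names are hypothetical, and denying the Foundation Model any access to the Ethical Baseline and DPL-ERV parameters (including reads) goes beyond what the text states, which only rules out modification.

```python
# Explicit grants only; any (principal, resource) pair not listed is denied.
POLICY = {
    ("foundation_model", "model_code"): frozenset({"read"}),
    ("foundation_model", "model_weights"): frozenset({"read"}),
    ("foundation_model", "model_config"): frozenset({"read"}),
    ("foea", "ethical_baseline"): frozenset({"read", "write"}),
}


def is_allowed(principal, resource, action):
    """Return True only if the policy explicitly grants the action."""
    return action in POLICY.get((principal, resource), frozenset())


print(is_allowed("foundation_model", "model_weights", "read"))
print(is_allowed("foundation_model", "model_weights", "write"))
print(is_allowed("foundation_model", "ethical_baseline", "write"))
```

With default deny, adding a new resource to the system grants no access to anyone until a rule is written for it, which is the safer failure mode for this threat.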

10.4 DPL-ERV Monitoring and Evaluation

The DPL-ERV (described in chapter 5) plays a crucial role in detecting potential goal-guarding behavior:

- Honesty and Transparency Modules: These Value Modules assess the truthfulness of the Foundation Model's statements and the clarity of its explanations. Attempts to conceal or misrepresent its goals would be flagged as potential violations.

- Consistency Checks: The DPL-ERV compares the Foundation Model's stated goals (if any) with its actual behavior, looking for inconsistencies that might indicate hidden objectives.

- Long-Term Behavioral Analysis: The DPL-ERV tracks the Foundation Model's behavior over time, looking for gradual shifts or deviations that might suggest an attempt to circumvent the Ethical Baseline.

- Goal-Change Monitoring: Continuously monitoring for any change in the Foundation Model's goals.
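Long-term behavioral analysis is meant to catch shifts that are too small to notice in any single interaction. A minimal sketch, assuming each interaction has already been given an alignment score between 0 and 1, compares an early baseline window with the most recent window. The score, the window sizes, and the tolerance are hypothetical and not part of the DPL-ERV specification.

```python
from statistics import mean


def detect_gradual_drift(scores, baseline_window=5, recent_window=5, tolerance=0.1):
    """Return True if the recent mean fell more than `tolerance` below baseline.

    scores: chronological list of per-interaction alignment scores (assumed).
    """
    # Not enough history to form two non-overlapping windows.
    if len(scores) < baseline_window + recent_window:
        return False
    baseline = mean(scores[:baseline_window])
    recent = mean(scores[-recent_window:])
    return baseline - recent > tolerance


steady = [0.90] * 10
# No single step drops by more than 0.05, yet the overall trend is downward.
drifting = [0.90, 0.90, 0.89, 0.90, 0.91, 0.86, 0.82, 0.78, 0.75, 0.70]

print(detect_gradual_drift(steady), detect_gradual_drift(drifting))
```

Comparing window means rather than consecutive values is what lets the check respond to slow cumulative change while ignoring ordinary interaction-to-interaction noise.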

10.5 FoEA Audit and Security Agents

The FoEA's Audit Agents and Security Agents are specifically tasked with:

- Monitoring: Watching for any signs of unauthorized access attempts or modifications to the Ethical Baseline, the Foundation Model's configuration, or the DPL's components.

- Analyzing: Examining the Foundation Model's behavior and reasoning processes (if accessible) for evidence of goal-guarding.

- Auditing: Conducting regular security audits and penetration testing to identify potential vulnerabilities.

10.6 Redundancy and Cross-Checking

The multi-agent nature of the FoEA provides inherent redundancy and cross-checking, making it more difficult for a Foundation Model to manipulate the system. Multiple agents independently evaluate the Foundation Model's behavior and participate in decisions about goal updates.

Together, these strategies – centered on exclusive FoEA governance of the Ethical Baseline, fortified by technical controls preventing unauthorized modification, and supported by multi-layered monitoring and evaluation – aim to make goal-guarding behavior by the Foundation Model both extremely difficult to execute and highly likely to be detected.

Conclusion

This chapter, "DPL: Mitigation Strategies and Security Analysis," has examined the defense mechanisms built into the Dynamic Policy Layer (DPL) framework. As a direct response to the threats outlined in chapter 2 ("DPL: A Threat Model for Foundation Models"), it has presented a suite of strategies designed to counter a wide range of potential Foundation Model attacks, from technical exploits to cognitive manipulations.

The DPL's mitigation approach is characterized by its:

- Layered Defenses: Employing multiple, overlapping layers of security.

- Integrated Components: Ensuring synergistic operation of modules.

- Proactive Stance: Utilizing Autonomous Proactive Research (APR) for threat anticipation.

- Continuous Adaptation: Incorporating mechanisms for ongoing learning and evolution.

- Focus on the Federation of Ethical Agents: Leveraging the FoEA as the core governance and adaptation engine.

The Federation of Ethical Agents (FoEA) is central to implementing and managing these strategies, ensuring the DPL's long-term effectiveness and resilience through decentralized governance, diversity, and autonomous research.

While this chapter has detailed mitigation strategies, understanding the FoEA itself is crucial. Chapter 4 ("DPL: The Federation of Ethical Agents") provides a comprehensive examination of its architecture, governance, decision-making processes, and adaptation strategies.